When critical services fail, businesses risk losing revenue, productivity, and trust. That’s why Google Cloud customers running SAP applications choose to deploy high availability (HA) systems on Google Cloud.

In these deployments, Linux operating system clustering provides application and guest awareness of the application state and automates recovery actions in case of failure, including the failure of a cluster node or resource, a node failover, or a failed action.

Pacemaker is the most popular software Linux administrators use to manage their HA clusters. Its capabilities include automated notifications about cluster events, including failover, fencing, and node, attribute, and resource events, as well as reporting on those events. With automated alerts and reports, Linux administrators can learn about events as they happen and make sure other stakeholders are alerted to take action when critical events occur. They can also review past events to assess the overall health of their HA systems.

Here, we break down the steps for setting up automated alerts for HA cluster events and for reporting on those alerts.

How to Deploy the Alert Script

To set up event-based alerts, you’ll need to take the following steps to execute the script.

1. Download the script file ‘gcp_crm_alert.sh’ from https://github.com/GoogleCloudPlatform/pacemaker-alerts-cloud-logging

2. As the root user, make the script executable and run the deployment with:

chmod +x ./gcp_crm_alert.sh
./gcp_crm_alert.sh -d

3. Confirm that the deployment runs successfully. If it does, you will see the following INFO log messages:

- In the Red Hat Enterprise Linux (RHEL) system:

gcp_crm_alert.sh:2022-01-24T23:48:30+0000:INFO:'pcs alert recipient add gcp_cluster_alert value=gcp_cluster_alerts id=gcp_cluster_alert_recepient options value=/var/log/crm_alerts_log' rc=0

- In the SUSE Linux Enterprise Server (SLES) system:

gcp_crm_alert.sh:2022-01-25T00:13:27+00:00:INFO:'crm configure alert gcp_cluster_alert /usr/share/pacemaker/alerts/gcp_crm_alert.sh meta timeout=10s timestamp-format=%Y-%m-%dT%H:%M:%S.%06NZ to { /var/log/crm_alerts_log attributes gcloud_timeout=5 gcloud_cmd=/usr/bin/gcloud }' rc=0
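If you run the deployment from another script, a quick check of the output can save a manual read-through. The sketch below is a minimal example and not part of the published script: `deploy_ok` is a hypothetical helper, and it assumes that every deployment step is logged with a trailing rc=<code>, as in the INFO samples above, so that a non-zero code signals a failed step.

```shell
#!/bin/sh
# Hypothetical helper (not part of gcp_crm_alert.sh).
# Reads deployment output on stdin and succeeds only if at least one line
# ends in " rc=0" and no line reports an rc that starts with a digit 1-9.
# Assumption: failed steps are logged with a non-zero rc.
deploy_ok() (
    ok=0
    while IFS= read -r line; do
        case "$line" in
            *" rc="[1-9]*) exit 1 ;;   # a step reported a non-zero return code
            *" rc=0") ok=1 ;;          # a step completed successfully
        esac
    done
    [ "$ok" -eq 1 ]
)

# Example usage (run as root on a cluster node):
#   ./gcp_crm_alert.sh -d 2>&1 | tee /tmp/gcp_crm_alert_deploy.log | deploy_ok \
#       && echo "deployment looks fine"
```

The check only inspects return codes, so it is still worth reading the full output the first time you deploy.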

Now, when a cluster node or resource event, a node failover, or a failed action occurs, Pacemaker will trigger the alert mechanism. For further details on the alerting agent, check out the Pacemaker Explained documentation.

How to Use Cloud Logging for Alert Reporting

Alerted events are published in Cloud Logging. Below is an example of the log record payload, where the cluster alert key-value pairs get recorded in the jsonPayload node.

{
  "insertId": "ktildwg1o3fbim",
  "jsonPayload": {
    "CRM_alert_recipient": "/var/log/crm_alerts_log",
    "CRM_alert_attribute_name": "",
    "CRM_alert_kind": "resource",
    "CRM_alert_status": "0",
    "CRM_alert_rsc": "STONITH-sapecc-scs",
    "CRM_alert_rc": "0",
    "CRM_alert_timestamp_usec": "",
    "CRM_alert_interval": "0",
    "CRM_alert_node_sequence": "21",
    "CRM_alert_task": "start",
    "CRM_alert_nodeid": "",
    "CRM_alert_timestamp": "2022-01-25T00:17:06.515313Z",
    "CRM_alert_timestamp_epoch": "",
    "CRM_alert_desc": "ok",
    "CRM_alert_target_rc": "0",
    "CRM_alert_version": "1.1.15",
    "CRM_alert_attribute_value": "",
    "CRM_alert_node": "sapecc-ers",
    "CRM_alert_exec_time": ""
  },
  "resource": {
    "type": "global",
    "labels": {
      "project_id": "gcp-tse-sap-on-gcp-lab"
    }
  },
  "timestamp": "2022-01-25T00:17:09.662557309Z",
  "severity": "INFO",
  "logName": "projects/gcp-tse-sap-on-gcp-lab/logs/sapecc-ers%2F%2Fvar%2Flog%2Fcrm_alerts_log",
  "receiveTimestamp": "2022-01-25T00:17:09.662557309Z"
}

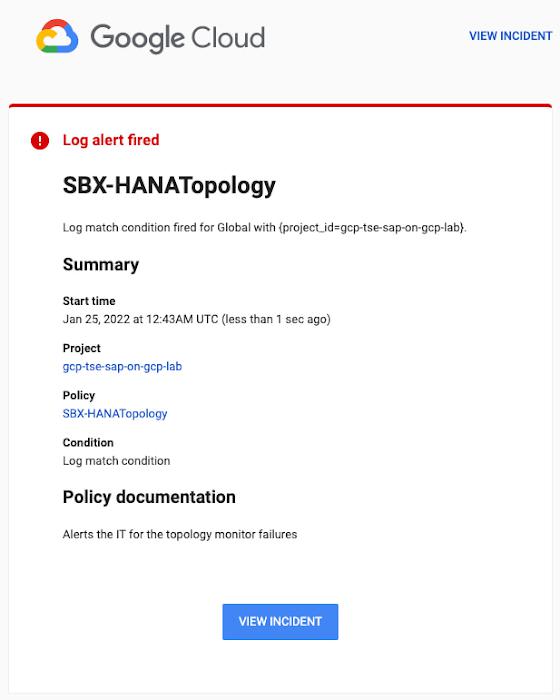
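To pull the same records from the command line, you can query a node's alert log by its log name. The sketch below is an illustration under assumptions: the log name pattern (host name, a slash, then the recipient path, with slashes URL-encoded) is inferred from the sample record above rather than taken from documentation, `crm_log_name` is a hypothetical helper, and `my-project` is a placeholder project ID.

```shell
#!/bin/sh
# Hypothetical helper: builds the Cloud Logging log name for a node's alert log.
# $1 = project ID, $2 = host name, $3 = recipient path (optional)
# Assumption: the pattern matches the "logName" field in the sample record.
crm_log_name() {
    recipient=${3:-/var/log/crm_alerts_log}
    # URL-encode the slashes in "<host>/<recipient path>"
    encoded=$(printf '%s' "$2/$recipient" | sed 's|/|%2F|g')
    printf 'projects/%s/logs/%s\n' "$1" "$encoded"
}

# Example usage (requires an authenticated gcloud CLI):
#   gcloud logging read "logName=\"$(crm_log_name my-project sapecc-ers)\"" \
#       --limit=5 --format=json
```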

To get notified of a resource event — for example, when the HANA topology resource monitor fails — you can use the following filter for the alerting definition:

jsonPayload.CRM_alert_node=("hana-venus" OR "hana-mercury")
-jsonPayload.CRM_alert_status="0"
jsonPayload.CRM_alert_rsc="rsc_SAPHanaTopology_SBX_HDB00"
jsonPayload.CRM_alert_task="monitor"

To define an alert for a fencing event, you can apply this filter:

jsonPayload.CRM_alert_node=("hana-venus" OR "hana-mercury")
jsonPayload.CRM_alert_kind="fencing"
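If your clusters have different node names, it can help to generate these filters instead of editing them by hand. The following is a minimal sketch: `node_clause` and `fencing_filter` are hypothetical helper names, and the output simply reproduces the filter syntax used in this article for whatever node names you pass in.

```shell
#!/bin/sh
# Hypothetical helper: prints the node clause for any number of node names,
# e.g. jsonPayload.CRM_alert_node=("a" OR "b")
node_clause() {
    clause=""
    for node in "$@"; do
        if [ -z "$clause" ]; then
            clause="\"$node\""
        else
            clause="$clause OR \"$node\""
        fi
    done
    printf 'jsonPayload.CRM_alert_node=(%s)\n' "$clause"
}

# Hypothetical helper: prints the two-line fencing filter for the given nodes.
fencing_filter() {
    printf '%s\njsonPayload.CRM_alert_kind="fencing"\n' "$(node_clause "$@")"
}

# Example usage (requires an authenticated gcloud CLI):
#   gcloud logging read "$(fencing_filter hana-venus hana-mercury)" \
#       --freshness=1d --limit=10
```

The same filter text can be pasted into the Logs Explorer or used as the condition of a log-based alert.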

The fencing log entry gets recorded with warning severity to give you deeper insight, and this additional information is also helpful for more specific filtering criteria:

{
  "insertId": "1plznskfjsxt82",
  "jsonPayload": {
    "CRM_alert_attribute_value": "",
    "CRM_alert_recipient": "/var/log/crm_alerts_log",
    "CRM_alert_rsc": "",
    "CRM_alert_rc": "0",
    "CRM_alert_timestamp_usec": "529261",
    "CRM_alert_desc": "Operation reboot of hana-mercury by hana-venus for crmd.2361@hana-venus: OK (ref=2a9bf814-9adf-4247-af3f-94ac254fc3ca)",
    "CRM_alert_target_rc": "",
    "CRM_alert_nodeid": "",
    "CRM_alert_kind": "fencing",
    "CRM_alert_node_sequence": "33",
    "CRM_alert_task": "st_notify_fence",
    "CRM_alert_status": "",
    "CRM_alert_exec_time": "",
    "CRM_alert_attribute_name": "",
    "CRM_alert_timestamp_epoch": "1643072786",
    "CRM_alert_version": "1.1.19",
    "CRM_alert_timestamp": "2022-01-25T01:06:26.529261Z",
    "CRM_alert_interval": "",
    "CRM_alert_node": "hana-mercury"
  },
  "resource": {
    "type": "global",
    "labels": {
      "project_id": "gcp-tse-sap-on-gcp-lab"
    }
  },
  "timestamp": "2022-01-25T01:06:27.267017052Z",
  "severity": "WARNING",
  "logName": "projects/gcp-tse-sap-on-gcp-lab/logs/hana-venus%2F%2Fvar%2Flog%2Fcrm_alerts_log",
  "receiveTimestamp": "2022-01-25T01:06:27.267017052Z"
}

Alerts can be delivered through multiple channels, including text and email. Below is an example of an email notification for our earlier example, when we defined an alert for a HANA topology resource monitor failure:

You can write and apply filters to your log-based alerts to isolate certain types of incidents and analyze events over time. For example, the following filter will surface resource events that occurred within a two-hour window on a specific date:

timestamp>="2022-01-25T00:00:00Z" timestamp<="2022-01-25T02:00:00Z"
jsonPayload.CRM_alert_kind="resource"
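When you review events regularly, computing the end of the window by hand gets tedious. The sketch below shows one possible way to generate the time-window filter from a start time and a duration. `window_filter` is a hypothetical helper, and it assumes GNU date is available (the -d option is not portable to every system).

```shell
#!/bin/sh
# Hypothetical helper: prints a Cloud Logging filter for one alert kind
# within a time window. Assumes GNU date for the -d option.
# $1 = window start in UTC (e.g. 2022-01-25T00:00:00Z)
# $2 = window length in hours
# $3 = alert kind (resource, node, fencing, or attribute)
window_filter() {
    start_epoch=$(date -u -d "$1" +%s) || return 1
    end_epoch=$((start_epoch + $2 * 3600))
    end=$(date -u -d "@$end_epoch" +%Y-%m-%dT%H:%M:%SZ)
    printf 'timestamp>="%s" timestamp<="%s"\n' "$1" "$end"
    printf 'jsonPayload.CRM_alert_kind="%s"\n' "$3"
}

# Example usage (requires an authenticated gcloud CLI):
#   gcloud logging read "$(window_filter 2022-01-25T00:00:00Z 2 resource)" \
#       --format=json
```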

With the ability to analyze these logged alerts over time, you can determine whether event patterns warrant any action.

[SIDEBAR] The alert script prints details to standard output and to the log file /var/log/crm_alerts_log, which can grow over time. We recommend managing the log file with the Linux logrotate service to limit the file system space it uses. Use the following command to create the necessary logrotate configuration for the alerting log file:

cat > /etc/logrotate.d/crm_alerts_log << END-OF-FILE
/var/log/crm_alerts_log {
    create 0660 root root
    rotate 7
    size 10M
    missingok
    compress
    delaycompress
    copytruncate
    dateext
    dateformat -%Y%m%d-%s
    notifempty
}
END-OF-FILE
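After creating the file, you may want to confirm that logrotate can parse it before the next scheduled run. The following is a small sketch that wraps logrotate's debug mode: `logrotate_dry_run` is a hypothetical helper name, and the default path is the one used in this article.

```shell
#!/bin/sh
# Hypothetical helper: dry-runs a logrotate configuration file.
# logrotate -d (debug mode) reports what would be done without rotating
# any logs or updating the logrotate state file.
logrotate_dry_run() {
    conf=${1:-/etc/logrotate.d/crm_alerts_log}
    [ -r "$conf" ] || { echo "cannot read $conf" >&2; return 1; }
    command -v logrotate >/dev/null 2>&1 || {
        echo "logrotate is not installed" >&2
        return 1
    }
    logrotate -d "$conf"
}

# Example usage (run as root on a cluster node):
#   logrotate_dry_run
```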

Tips for Troubleshooting

When you first deploy your alert script, how can you tell for certain that you’ve done it correctly? Use the following commands to test it out:

- In RHEL:

pcs alert show

- In SLES:

sudo crm config show | grep -A3 gcp_cluster_alert

You should see the following if the script is deployed correctly:

- In RHEL:

Alerts:
 Alert: gcp_cluster_alert (path=/usr/share/pacemaker/alerts/gcp_crm_alert.sh)
  Description: "Cluster alerting for hana-node-X"
  Options: gcloud_cmd=/usr/bin/gcloud gcloud_timeout=5
  Meta options: timeout=10s timestamp-format=%Y-%m-%dT%H:%M:%S.%06NZ
  Recipients:
   Recipient: gcp_cluster_alert_recepient (value=gcp_cluster_alerts)
    Options: value=/var/log/crm_alerts_log

- In SLES:

alert gcp_cluster_alert "/usr/share/pacemaker/alerts/gcp_crm_alert.sh" \
    meta timeout=10s timestamp-format="%Y-%m-%dT%H:%M:%S.%06NZ" \
    to "/var/log/crm_alerts_log" attributes gcloud_timeout=5 gcloud_cmd="/usr/bin/gcloud"

If the commands do not display the alerts properly, re-deploy the script.

If there is an issue with the script, or if the Cloud Logging records do not appear as expected, examine the script log file /var/log/crm_alerts_log. Errors and warnings can be filtered with:

egrep '(ERROR|WARN)' /var/log/crm_alerts_log
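For a quick health summary rather than the full list of lines, you can count the entries per level. This is a sketch under an assumption: it expects the level to appear as a colon-delimited field (for example :ERROR:), as in the INFO samples shown earlier, and `count_levels` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: prints how many ERROR and WARN/WARNING lines a log holds.
# $1 = log file (for example /var/log/crm_alerts_log)
# Assumption: the level is a colon-delimited field, as in the INFO samples.
count_levels() {
    # grep -c exits non-zero when nothing matches, so guard it with "|| true"
    errors=$(grep -c ':ERROR:' "$1" || true)
    warns=$(grep -c ':WARN' "$1" || true)
    printf 'ERROR=%s WARN=%s\n' "$errors" "$warns"
}

# Example usage:
#   count_levels /var/log/crm_alerts_log
```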

Any Pacemaker alert failures will be recorded in the messages and/or Pacemaker log. To examine recent alert failures, use the following command:

egrep '(gcp_crm_alert.sh|gcp_cluster_alert)' \
    /var/log/messages /var/log/pacemaker.log

Keep in mind, though, that the Pacemaker log location may be different in your system from the one in the example above.

From reactive to proactive

Your SAP applications are too critical to risk outages. The most effective way to manage high availability clusters for your SAP systems on Google Cloud is to take full advantage of Pacemaker’s alerting capabilities, so you can be proactive in ensuring your systems are healthy and available.

Learn more about running SAP on Google Cloud.

By: Tsvetomir Tsvetanov (Technical Solution Engineer Manager)

Source: Google Cloud Blog
