Integrating your ArgoCD deployment with Connect Gateway and Workload Identity provides a seamless path to deploy to Kubernetes on many platforms. ArgoCD can easily be configured to centrally manage various cluster platforms including GKE clusters, Anthos clusters, and many more. This promotes consistency across your fleet, saves time in onboarding a new cluster, and simplifies your distributed RBAC model for management connectivity. Skip to steps below to configure ArgoCD for your use case.

Background

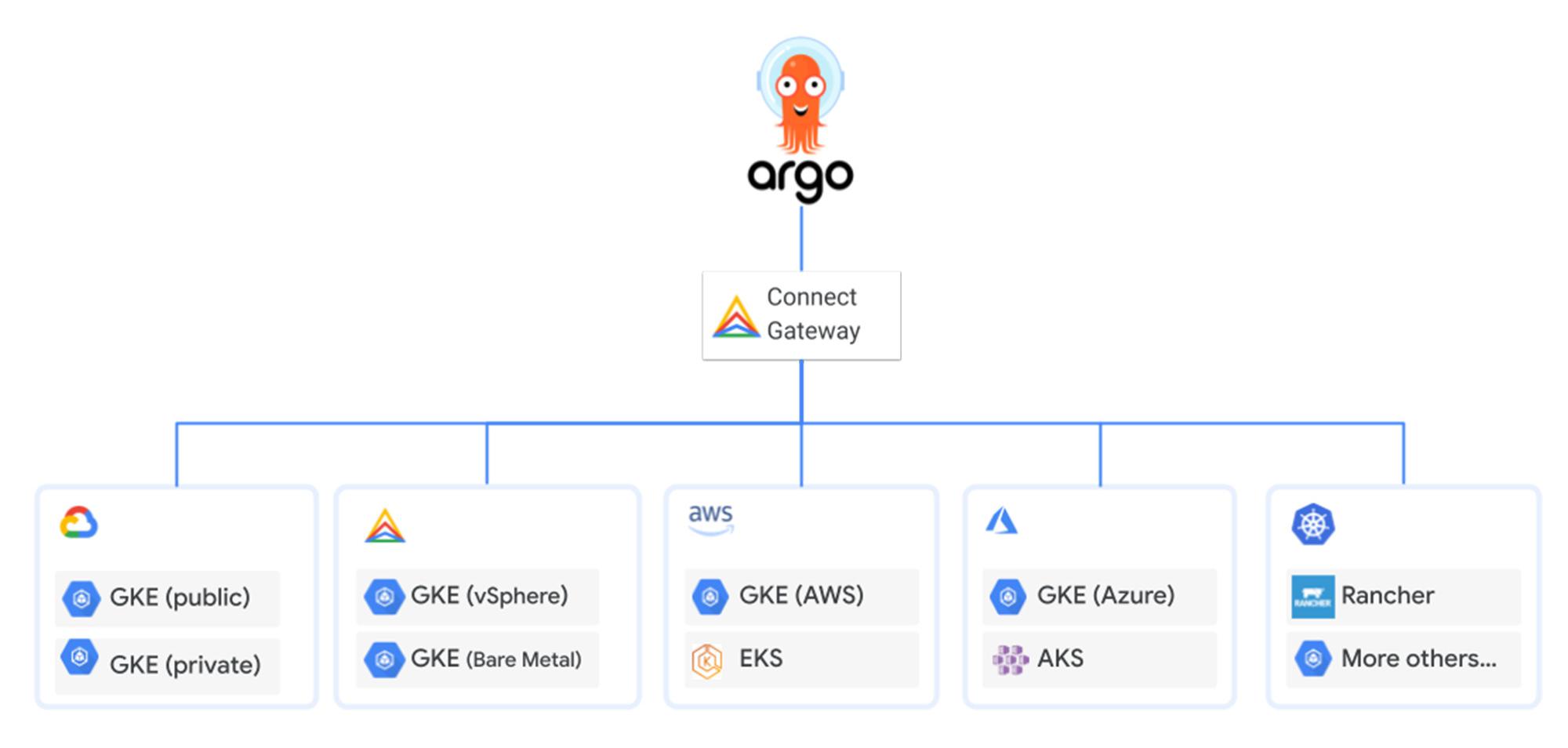

Cloud customers who choose ArgoCD as their continuous delivery tool may opt into a centralized architecture, whereby ArgoCD is hosted in a central K8s cluster and is responsible for managing various distributed application clusters. These application clusters can live on many cloud platforms and are accessed via Connect Gateway, which simplifies ArgoCD authentication, authorization, and interfacing with the K8s API server. The diagram above outlines this model.

The default authentication behavior when adding an application cluster to ArgoCD is to use the operator’s kubeconfig for the initial control plane connection, create a local KSA in the application cluster (`argo-manager`), and escrow the KSA’s bearer token in a K8s secret. This presents two unique challenges for the pipeline administrator:

- Principal management: GCP doesn’t provide a federated Kubernetes Service Account that is scoped across multiple clusters. We have to create one KSA for each cluster, managed by ArgoCD. Each KSA is local to the control plane and requires an individual lifecycle management process, e.g. credential rotation, redundant RBAC, etc.

- Authorization: The privileged KSA secrets are stored in the ArgoCD central cluster. Guarding them adds operator overhead and makes the central cluster a security risk.

These two challenges are directly addressed/mitigated by using Connect Gateway and Workload Identity. The approach:

- Let’s not create a cluster admin KSA at all – instead, we’ll use a GSA and map it to the ArgoCD server and application controller workloads in the `argocd` namespace (via Workload Identity). This GSA will be authorized as a cluster admin on each application cluster in an IAM policy or K8s RBAC policy.

- The K8s secret will be replaced with a more secure mechanism that allows ArgoCD to obtain a Google OAuth token and authenticate to Google Cloud APIs. This eliminates the need for managing KSA secrets and automates rotation of the obtained OAuth token.

- The ArgoCD central cluster and managed application clusters are independent of one another; that is to say, these clusters don’t need to be in the same GCP project, nor do they require close network relationships, e.g. VPC Peering, SSH tunnels, HTTP proxies, bastion hosts, etc.

- Target application clusters can reside on different cloud platforms. The same authentication/authorization and connection models are extended to these clusters as well.

Installation Steps

First, some quick terminology. I’m going to call one of the clusters `central-cluster`, and the other `application-cluster`. The former will host the central ArgoCD deployment, the latter will be where we deploy applications/configuration.

Prerequisites:

- At least one bootstrapped GCP project containing a VPC network, subnets with sufficient secondary ranges to support a VPC native cluster, and required APIs activated to host a GKE and/or Anthos cluster.

- Two clusters deployed with Workload Identity enabled and reasonably sane defaults. I prefer Terraform based deployments and chose to use the safer-cluster module.

- If using Anthos clusters, it is assumed that they are fully deployed and registered with Fleet (thus they are reachable via Connect Gateway).

- The gcloud SDK must be installed, and you must be authenticated via `gcloud auth login`.

- Optional: set the PROJECT_ID environment variable in order to quickly run the gcloud commands below without substitution.

Identity Configuration:

In this section, we set up a GSA which is the identity used by ArgoCD when it interacts with Google Cloud.

1. Create a Google service account and set required permissions

gcloud iam service-accounts create argocd-fleet-admin --project $PROJECT_ID

gcloud projects add-iam-policy-binding $PROJECT_ID --member "serviceAccount:argocd-fleet-admin@${PROJECT_ID}.iam.gserviceaccount.com" --role roles/gkehub.gatewayEditor

2. Create IAM policy allowing the ArgoCD namespace/KSA to impersonate the previously created GSA

gcloud iam service-accounts add-iam-policy-binding --role roles/iam.workloadIdentityUser --member "serviceAccount:${PROJECT_ID}.svc.id.goog[argocd/argocd-server]" argocd-fleet-admin@$PROJECT_ID.iam.gserviceaccount.com

gcloud iam service-accounts add-iam-policy-binding --role roles/iam.workloadIdentityUser --member "serviceAccount:${PROJECT_ID}.svc.id.goog[argocd/argocd-application-controller]" argocd-fleet-admin@$PROJECT_ID.iam.gserviceaccount.com
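The two bindings above differ only in the KSA name, so they can be generated in a loop. The following is a minimal dry-run sketch: it only prints the commands rather than running them, the `wi_member` helper is a hypothetical name introduced here for illustration, and `my-project-id` is a placeholder default, not a real project.

```shell
# Dry-run sketch: prints the gcloud commands instead of executing them.
PROJECT_ID="${PROJECT_ID:-my-project-id}"   # placeholder default (assumption)
GSA_EMAIL="argocd-fleet-admin@${PROJECT_ID}.iam.gserviceaccount.com"

# Build the Workload Identity member string for a project/namespace/KSA triple.
# (Hypothetical helper, not part of gcloud.)
wi_member() {
  printf 'serviceAccount:%s.svc.id.goog[%s/%s]' "$1" "$2" "$3"
}

# One binding per ArgoCD KSA that needs to impersonate the GSA.
for ksa in argocd-server argocd-application-controller; do
  echo "gcloud iam service-accounts add-iam-policy-binding ${GSA_EMAIL}" \
    "--role roles/iam.workloadIdentityUser" \
    "--member \"$(wi_member "$PROJECT_ID" argocd "$ksa")\""
done
```

Review the printed commands, then run them (or pipe the output to `sh`) once they look right for your project.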

Application-cluster Configuration (GKE Only):

In this section, we enroll a GKE cluster into a Fleet so it can be managed by ArgoCD via Connect Gateway.

1. Enable the required APIs in the desired GCP project

gcloud services enable --project=$PROJECT_ID gkeconnect.googleapis.com gkehub.googleapis.com cloudresourcemanager.googleapis.com iam.googleapis.com connectgateway.googleapis.com

2. Grant ArgoCD permissions to access and manage the application-cluster

gcloud projects add-iam-policy-binding $PROJECT_ID --member "serviceAccount:argocd-fleet-admin@${PROJECT_ID}.iam.gserviceaccount.com" --role roles/container.admin

3. List application cluster(s) URI used for registration

gcloud container clusters list --uri --project $PROJECT_ID

4. Register the application-cluster(s) with the Fleet Project. We use gcloud SDK commands as an example here. If you prefer other tools like Terraform, please refer to this document.

gcloud container fleet memberships register $CLUSTER_NAME --gke-uri {URI from step 3} --enable-workload-identity --project $PROJECT_ID

5. View the application-cluster(s) Fleet membership

gcloud container fleet memberships list --project $PROJECT_ID
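When several clusters need to be registered, steps 3 and 4 can be chained. The sketch below is a dry run that reads cluster URIs from standard input and prints one register command per cluster. It assumes the Fleet membership name should match the cluster name (the last path segment of the URI); the function names and the sample URI are made up for illustration.

```shell
PROJECT_ID="${PROJECT_ID:-my-project-id}"   # placeholder default (assumption)

# Extract the cluster name (last path segment) from a cluster URI.
cluster_name_from_uri() {
  printf '%s' "${1##*/}"
}

# Read URIs from stdin and print one register command per non-empty line.
print_register_commands() {
  while IFS= read -r uri; do
    [ -n "$uri" ] || continue
    echo "gcloud container fleet memberships register $(cluster_name_from_uri "$uri")" \
      "--gke-uri $uri --enable-workload-identity --project $PROJECT_ID"
  done
}

# Example with a made-up URI. In practice, pipe in the output of:
#   gcloud container clusters list --uri --project $PROJECT_ID
printf '%s\n' \
  "https://container.googleapis.com/v1/projects/${PROJECT_ID}/zones/us-central1-a/clusters/application-cluster" \
  | print_register_commands
```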

Application-cluster Configuration (non-GKE):

In this section, we discuss the steps to enroll a non-GKE cluster (e.g. Anthos-on-VMware, Anthos-on-BareMetal, etc.) into ArgoCD.

Similar to the GKE scenario, the first step is to register the cluster to a Fleet project. Most recent Anthos versions already have the clusters registered when the cluster is created. If your cluster is not registered yet, refer to this guide to register it.

1. View the application-cluster(s) Fleet membership

gcloud container fleet memberships list --project $PROJECT_ID

2. Grant ArgoCD permissions to access and manage the application-cluster. The ArgoCD GSA created in step 1 of Identity Configuration needs to be provisioned in `application-cluster` RBAC in order for ArgoCD to connect to and manage this cluster. Replace <CONTEXT> and <KUBECONFIG> with appropriate values.

gcloud container fleet memberships generate-gateway-rbac --membership=<MEMBERSHIP_SHOWN_IN_LAST_STEP> --users=argocd-fleet-admin@$PROJECT_ID.iam.gserviceaccount.com --role=clusterrole/cluster-admin --context=<CONTEXT> --kubeconfig=<KUBECONFIG> --apply

ArgoCD Deployment on Central-cluster:

Version 2.4.0 or higher is required. It is recommended that you use Kustomize to deploy ArgoCD to the central-cluster. This allows for the most succinct configuration patching required to enable Workload Identity and Connect Gateway.

1. Create a namespace to deploy ArgoCD in

kubectl create namespace argocd

2. GKE Cluster: If the central-cluster is hosted on GKE, annotate the argocd-server and argocd-application-controller KSAs with the GSA created in step 1 of Identity Configuration. An example Kustomize overlay manifest to achieve this:

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      annotations:
        iam.gke.io/gcp-service-account: argocd-fleet-admin@$PROJECT_ID.iam.gserviceaccount.com
      name: argocd-application-controller
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      annotations:
        iam.gke.io/gcp-service-account: argocd-fleet-admin@$PROJECT_ID.iam.gserviceaccount.com
      name: argocd-server

3. Anthos Cluster: If the central-cluster is hosted on an Anthos cluster, we need to configure Workload Identity as referenced here. The prerequisite is the central-cluster must be registered to a Fleet project. Based on this condition, the ArgoCD workloads can be configured to impersonate the desired GSA. An example Kustomize overlay manifest to achieve this can be found on this repo.

4. Optional: Expose the ArgoCD server externally (or internally) with a service type LoadBalancer or with an Ingress:

    apiVersion: v1
    kind: Service
    metadata:
      name: argocd-server
    spec:
      type: LoadBalancer

5. Deploy ArgoCD to the central-cluster argocd namespace created in step 1 using your preferred tooling. An example using Kustomize, where overlays/demo is the path containing overlay manifests:

kubectl apply -k overlays/demo
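Kustomize does not expand shell variables such as `$PROJECT_ID` inside manifests, so the overlay files need the project ID written in before they are applied. One way to do that is to generate the overlay from the shell. This is a sketch under assumptions, not the authors' repository layout: the file names, the upstream `install.yaml` URL pinned to v2.4.0, and the `my-project-id` default are all illustrative choices.

```shell
PROJECT_ID="${PROJECT_ID:-my-project-id}"   # placeholder default (assumption)
OVERLAY_DIR="${OVERLAY_DIR:-overlays/demo}"
GSA_EMAIL="argocd-fleet-admin@${PROJECT_ID}.iam.gserviceaccount.com"
mkdir -p "$OVERLAY_DIR"

# Quoted heredoc delimiter: nothing in this file is expanded by the shell.
cat > "$OVERLAY_DIR/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: argocd
resources:
  # Assumed upstream manifest; pin to the ArgoCD version you have validated.
  - https://raw.githubusercontent.com/argoproj/argo-cd/v2.4.0/manifests/install.yaml
patches:
  - path: argocd-server-sa.yaml
  - path: argocd-application-controller-sa.yaml
EOF

# One patch file per KSA, with the GSA email expanded by the shell.
for ksa in argocd-server argocd-application-controller; do
  cat > "$OVERLAY_DIR/${ksa}-sa.yaml" <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${ksa}
  annotations:
    iam.gke.io/gcp-service-account: ${GSA_EMAIL}
EOF
done

ls "$OVERLAY_DIR"
```

After inspecting the generated files, `kubectl apply -k overlays/demo` deploys them as in step 5.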

Testing ArgoCD:

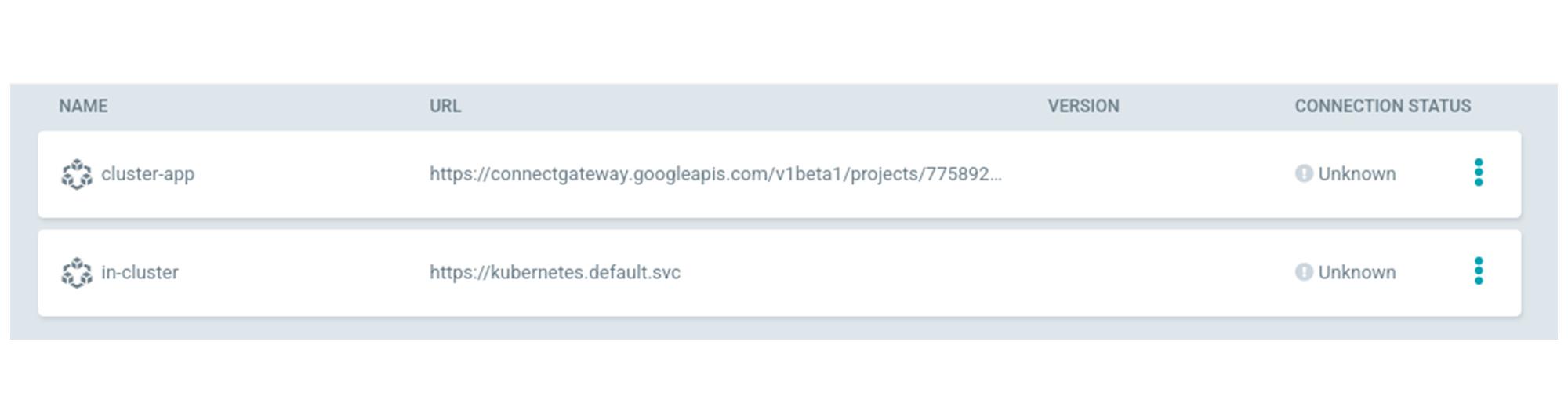

You should now have a functioning ArgoCD deployment with its UI optionally exposed externally and/or internally. The following steps will add the application cluster as an ArgoCD “external cluster”, and confirm that the integration is working as expected.

1. Create a cluster type secret representing your application cluster and apply to the central cluster argocd namespace (e.g. kubectl apply -f cluster-secret.yaml -n argocd)

    apiVersion: v1
    kind: Secret
    metadata:
      name: cluster-app
      labels:
        argocd.argoproj.io/secret-type: cluster
    type: Opaque
    stringData:
      name: cluster-app
      server: https://connectgateway.googleapis.com/v1beta1/projects/$PROJECT_NUMBER/locations/global/gkeMemberships/$CLUSTER_REGISTRATION_NAME
      config: |
        {
          "execProviderConfig": {
            "command": "argocd-k8s-auth",
            "args": ["gcp"],
            "apiVersion": "client.authentication.k8s.io/v1beta1"
          },
          "tlsClientConfig": {
            "insecure": false,
            "caData": ""
          }
        }

Note: This step is best suited for an IaC pipeline that is responsible for the application cluster lifecycle. This secret should be dynamically generated and applied to the central-cluster once an application cluster has been successfully created and/or modified. Likewise, the secret should be deleted from the central-cluster once an application cluster is deleted.
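As a rough illustration of generating this secret dynamically, the sketch below renders the manifest from a project number and a Fleet membership name. The `render_cluster_secret` function and the `cluster-<membership>` naming scheme are assumptions made for this example, and the project number shown is a dummy value.

```shell
# render_cluster_secret PROJECT_NUMBER MEMBERSHIP_NAME
# Prints an ArgoCD cluster secret that points at the Connect Gateway endpoint.
# (Hypothetical helper; the secret naming scheme is an assumption.)
render_cluster_secret() {
  project_number="$1"
  membership="$2"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: cluster-${membership}
  labels:
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: ${membership}
  server: https://connectgateway.googleapis.com/v1beta1/projects/${project_number}/locations/global/gkeMemberships/${membership}
  config: |
    {
      "execProviderConfig": {
        "command": "argocd-k8s-auth",
        "args": ["gcp"],
        "apiVersion": "client.authentication.k8s.io/v1beta1"
      },
      "tlsClientConfig": {
        "insecure": false,
        "caData": ""
      }
    }
EOF
}

# Dummy values for illustration only.
render_cluster_secret 123456789012 application-cluster
```

In a pipeline, the output could be piped to `kubectl apply -n argocd -f -`. The project number can be looked up with `gcloud projects describe "$PROJECT_ID" --format='value(projectNumber)'`.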

2. Log in to the ArgoCD console to confirm that the application cluster has been successfully added. The command below can be used to acquire the initial admin password for ArgoCD

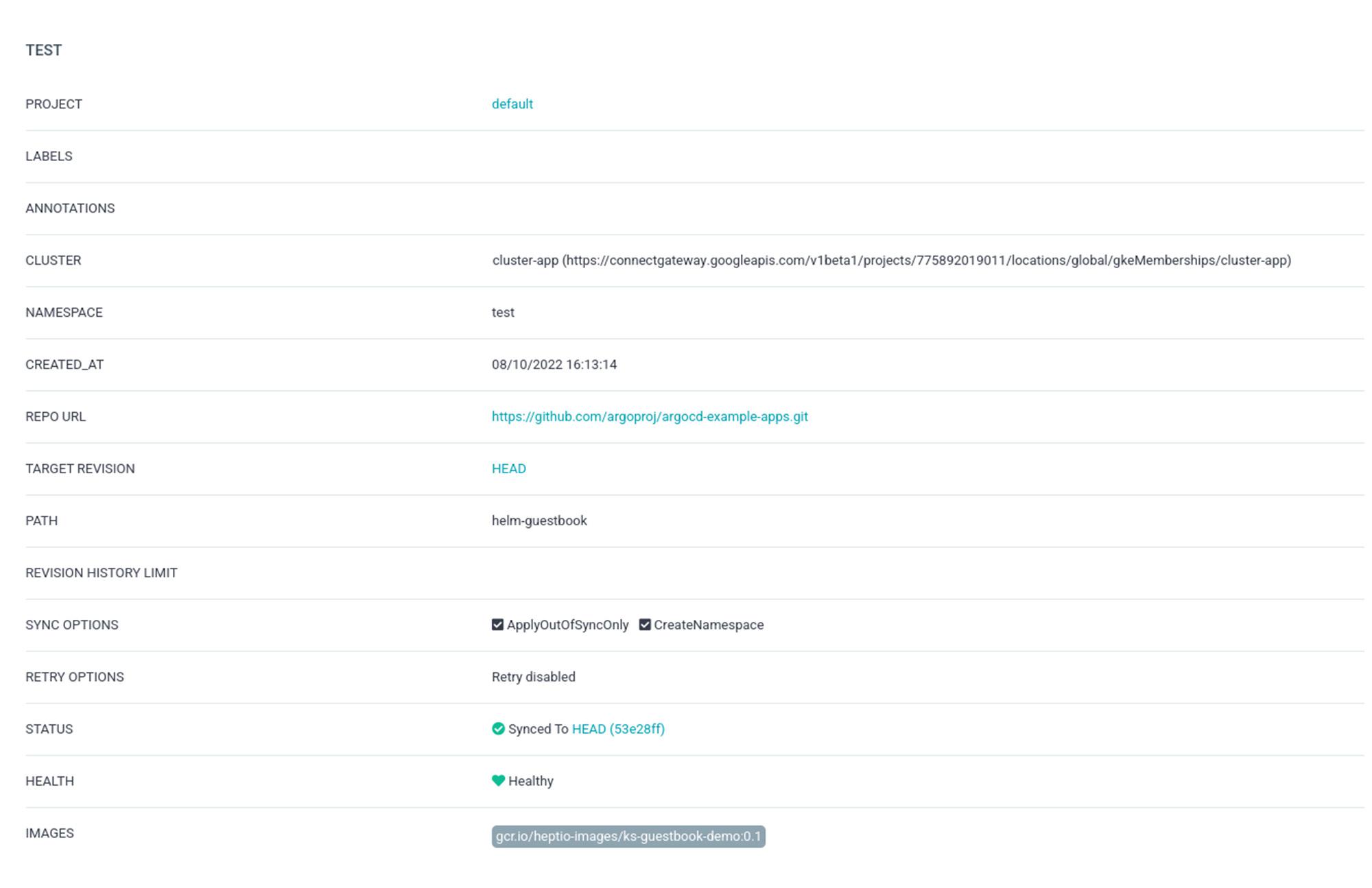

kubectl get secret -n argocd argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d

3. Push a test application from ArgoCD and confirm that the application cluster is synced successfully. See repository here for example applications you can deploy for a quick test.

Closing Thoughts

The above steps demonstrate how to enable continuous deployment to distributed Kubernetes platforms using Connect Gateway and Workload Identity. This centralized architecture helps simplify and secure your configuration and application delivery pipelines, and promotes consistency across your fleet.

If you would like to step through a proof of concept that focuses on how to use ArgoCD and Argo Rollouts to automate the state of a Fleet of GKE clusters, please check out this post, where you can construct key operator user stories:

- Add a new application cluster to the Fleet with zero touch beyond deploying the cluster and giving it a particular label. The new cluster should install a baseline set of configurations for tooling and security along with any applications that align with the cluster’s label.

- Add a new application to the Fleet that inherits baseline multi-tenant configurations for the dev team that delivers the application and binds Kubernetes RBAC to that team’s Identity Group.

- Progressively roll out a new version of an application across groups, or waves, of clusters with a manual gate in between each wave.

By: Matt Williams (Customer Engineer, Infrastructure) and Xuebin Zhang (Software Engineer, Kubernetes)

Source: Google Cloud Blog
