Increasingly more enterprises adopt Machine Learning (ML) capabilities to enhance their services, products, and operations. As their ML capabilities mature, they build centralized ML Platforms to serve many teams and users across their organization. Machine learning is inherently an experimental process requiring repeated iterations. An ML Platform standardizes the model development and deployment workflow to offer greater consistency for the repeated process. This facilitates productivity and reduces time from prototype to production.

From our partners:

To start building an ML Platform, you should support the basic ML user journey of notebook prototyping to scaled training to online serving. If your organization has multiple teams, you may additionally need to support administrative requirements of multi-user support with identity-based authentication and authorization. Two popular OSS projects – Kubeflow and Ray – together can support these needs. Kubeflow provides the multi-user environment and interactive notebook management. Ray orchestrates distributed computing workloads across the entire ML lifecycle, including training and serving.

Google Kubernetes Engine (GKE) simplifies deploying OSSe ML software in the cloud with autoscaling and auto-provisioning. GKE reduces the effort to deploy and manage the underlying infrastructure at scale and offers the flexibility to use your ML frameworks of choice. In this article, we will show how Kubeflow and Ray can be assembled into a seamless experience. We will demonstrate how platform builders can deploy them both to GKE to provide a comprehensive, production-ready ML platform.

Kubeflow and Ray

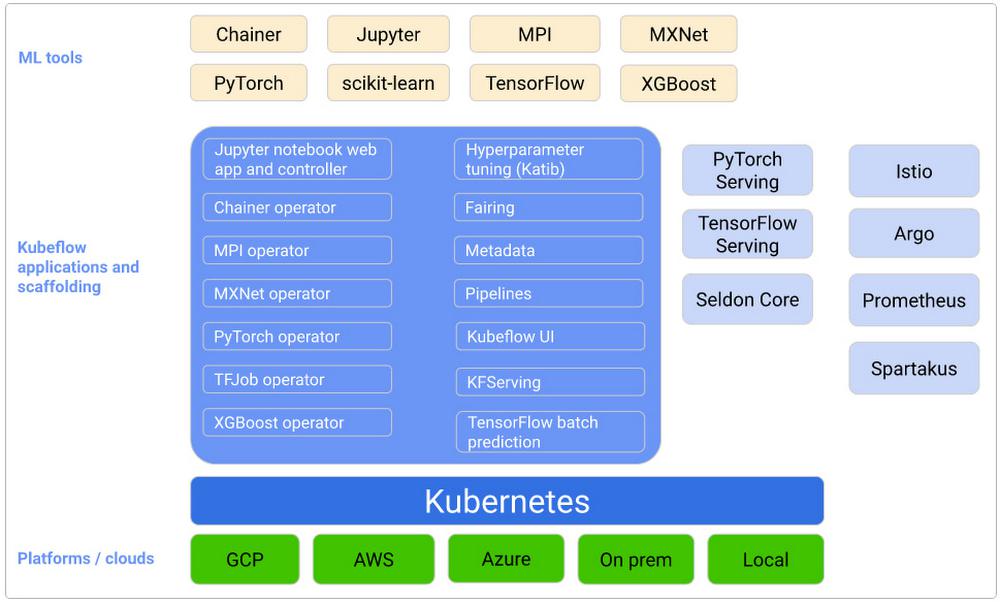

First, let’s take a closer look at these two OSS projects. While both Kubeflow and Ray deal with the problem of enabling ML at scale, they focus on very different aspects of the puzzle.

Kubeflow is a Kubernetes-native ML platform aimed at simplifying the build-train-deploy lifecycle of ML models. As such, its focus is on general MLOps. Some of the unique features offered by Kubeflow include:

- Built-in integration with Jupyter notebooks for prototyping

- Multi-user isolation support

- Workflow orchestration with Kubeflow Pipelines

- Identity-based authentication and authorization through Istio Integration

- Out-of-the-box integration with major cloud providers such as GCP, Azure, and AWS

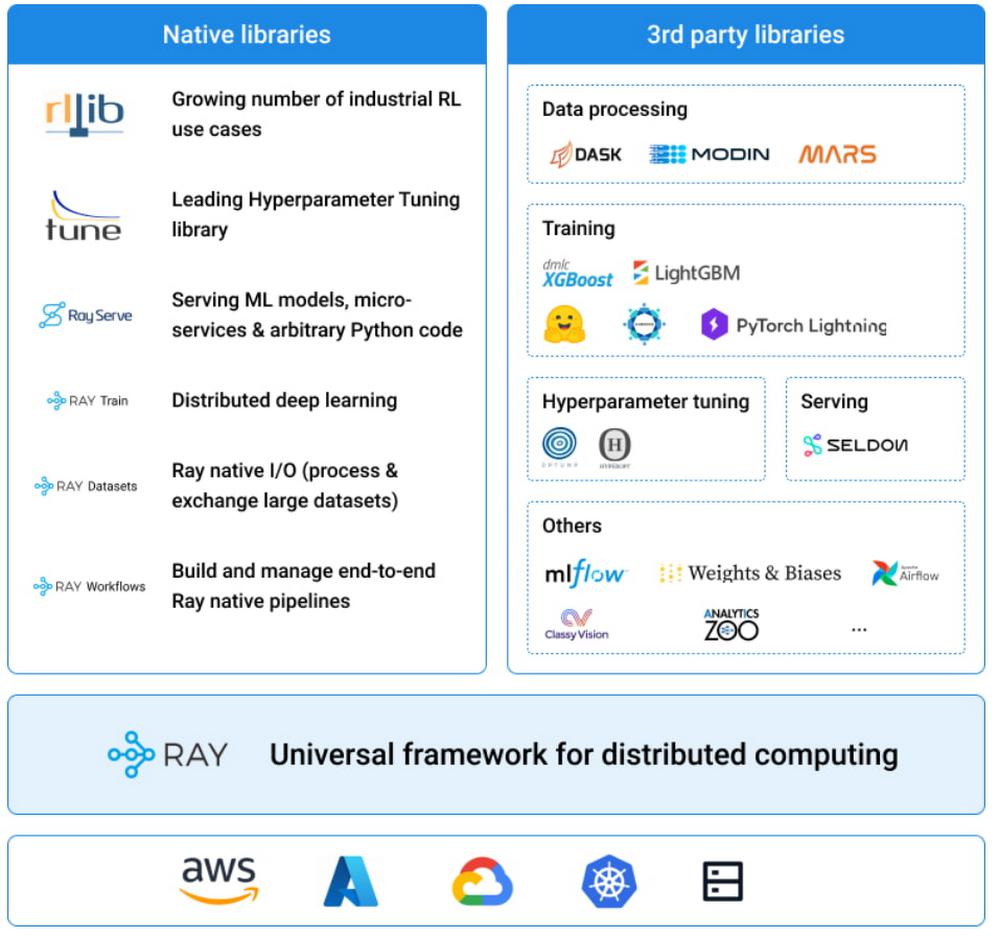

- RLLib for reinforcement learning

- Ray Tune for hyperparameter tuning

- Ray Train for distributed deep learning

- Ray Serve for scalable model serving

- Ray Data for preprocessing

Source: https://docs.ray.io/en/latest/index.html#what-is-ray

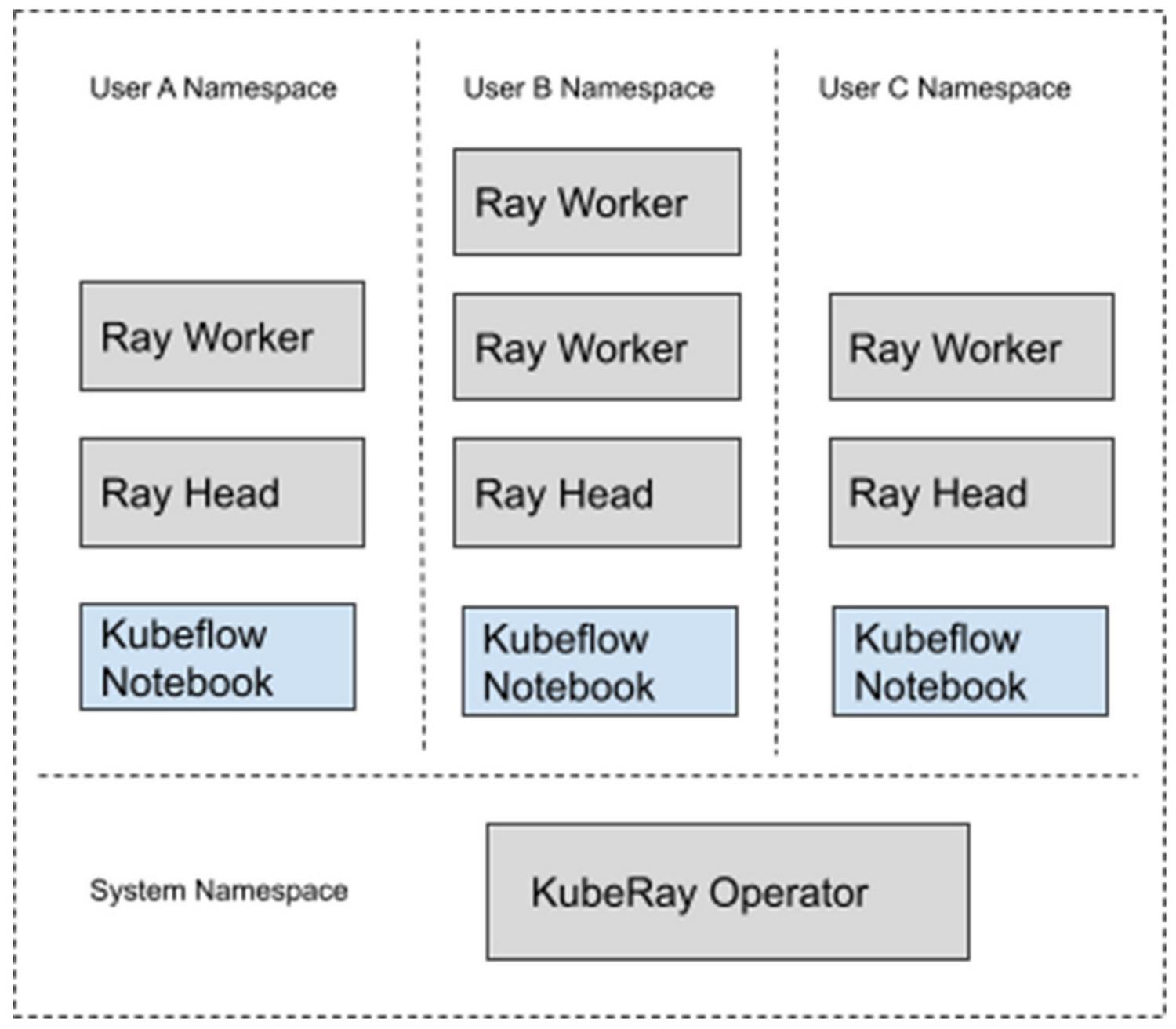

- Supports Ray Train with autoscaling and resource provisioning

- Integrated with identity-based authentication and authorization

- Supports multi-user isolation and collaboration

- Contains an interactive notebook server

Let’s now take a look at how we can put these two platforms together and take advantage of the useful features offered by each. Specifically, we will deploy Kuberay in a GKE cluster installed with Kubeflow. The system looks something like this:

- Google Kubernetes Engine 1.21.12-gke.2200

- Kubeflow 1.5.0

- Kuberay 0.3.0

- Python 3.7

- Ray 1.13.1

The configuration files used for this deployment can be found here.

Deploying Kubeflow and Kuberay

For deploying Kubeflow, we will be using the GCP instructions here. For simplicity purposes, I have used mostly default configuration settings. You can freely experiment with customizations before deploying, for example, you can enable GPU nodes in your cluster by following these instructions.

Deploying the KubeRay operator is pretty straightforward. We will be using the latest released version:

export KUBERAY_VERSION=v0.3.0

kubectl create -k "github.com/ray-project/kuberay/manifests/cluster-scope-resources?ref=${KUBERAY_VERSION}"

kubectl apply -k "github.com/ray-project/kuberay/manifests/base?ref=${KUBERAY_VERSION}"

Creating Your Kubeflow User Profile

Before you can deploy and use resources in Kubeflow, you need to first create your user profile. If you follow the GKE installation instructions, you should be able to navigate to https://[cluster].endpoints.[project].cloud.goog/ in your browser, where [cluster] is the name of your GKE cluster and [project] is your GCP project name.

This should redirect you to a web page where you can use your GCP credentials to authenticate yourself.

Build the Ray Worker Image

Next, let’s build the image we’ll be using for the Ray cluster. Ray is very sensitive when it comes to version compatibility (for example, the head and worker nodes must use the same versions of Ray and Python), so it is highly recommended to prepare and version-control your own worker images. Look for the base image you want from their Docker page here: rayproject/ray – Docker Image.

The following is a functioning worker image using Ray 1.13 and Python 3.7:

FROM rayproject/ray:1.13.1-py37

RUN pip install numpy tensorflow

CMD ["bin/bash"]

FROM rayproject/ray:1.13.1-py37-gpu

RUN pip install numpy tensorflow

CMD ["bin/bash"]

$ docker build -t <path-to-your-image> -f Dockerfile .

$ docker push <path-to-your-image>

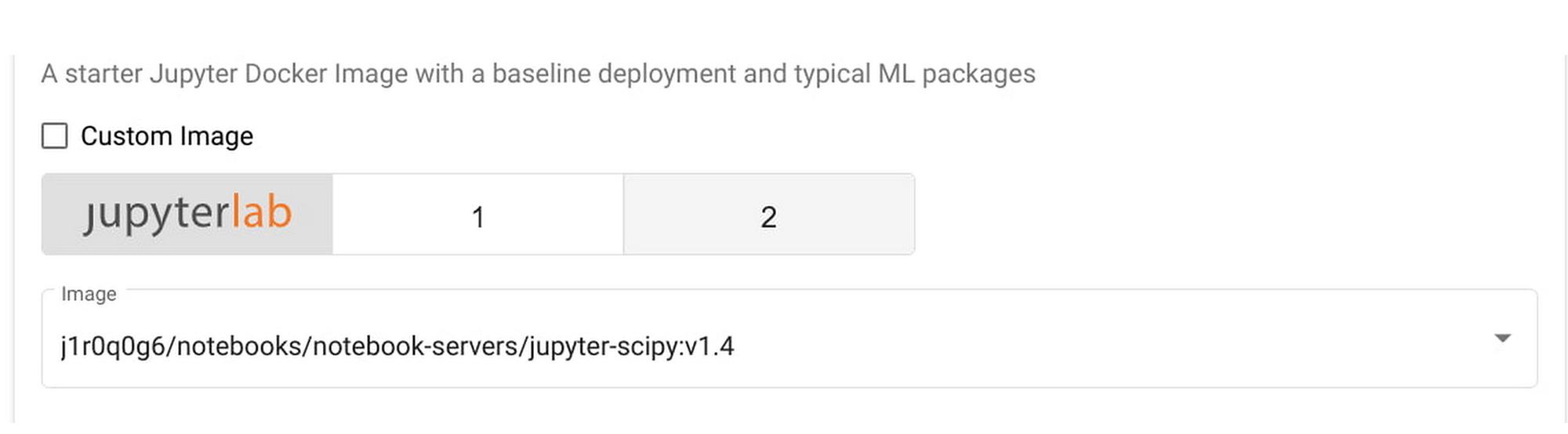

Build the Jupyter Notebook Image

Similarly we need to build the notebook image that we are going to use. Because we are going to use this notebook to interact with the Ray cluster, we need to ensure that it uses the same version of Ray and Python as the Ray workers.

The Kubeflow example Jupyter notebooks can be found at Example Notebook Servers. For this example, I changed the PYTHON_VERSION in components/example-notebook-servers/jupyter/Dockerfile to the following:

ARG MINIFORGE_VERSION=4.10.1-4

ARG PIP_VERSION=21.1.2

ARG PYTHON_VERSION=3.7.10

$ docker build -t <path-to-your-image> -f Dockerfile .

$ docker push <path-to-your-image>

Deploy a Ray Cluster

Now we are ready to configure and deploy our Ray cluster.

1. Copy the following sample yaml file from GitHub:

curl https://github.com/richardsliu/ray-on-gke/blob/main/manifests/ray-cluster.serve.yaml -o ray-cluster.serve.yaml

namespace: %your_name%

image: %your_image%

resources:

limits:

cpu: 1

requests:

cpu: 200m

kubectl apply -f raycluster.serve.yaml

$ kubectl get pods -n <user name>

NAME READY STATUS RESTARTS AGE

example-cluster-head-8cbwb 1/1 Running 0 12s

example-cluster-worker-large-group-75lsr 1/1 Running 0 12s

example-cluster-worker-large-group-jqvtp 1/1 Running 0 11s

example-cluster-worker-large-group-t7t4n 1/1 Running 0 12s

$ kubectl get services -n <user name>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

example-cluster-head-svc ClusterIP 10.52.9.88 <none> 8265/TCP,10001/TCP,8000/TCP,6379/TCP 18s

Training a ML Model

We are going to use a Notebook to orchestrate our model training. We can access Ray from a Jupyter notebook session.

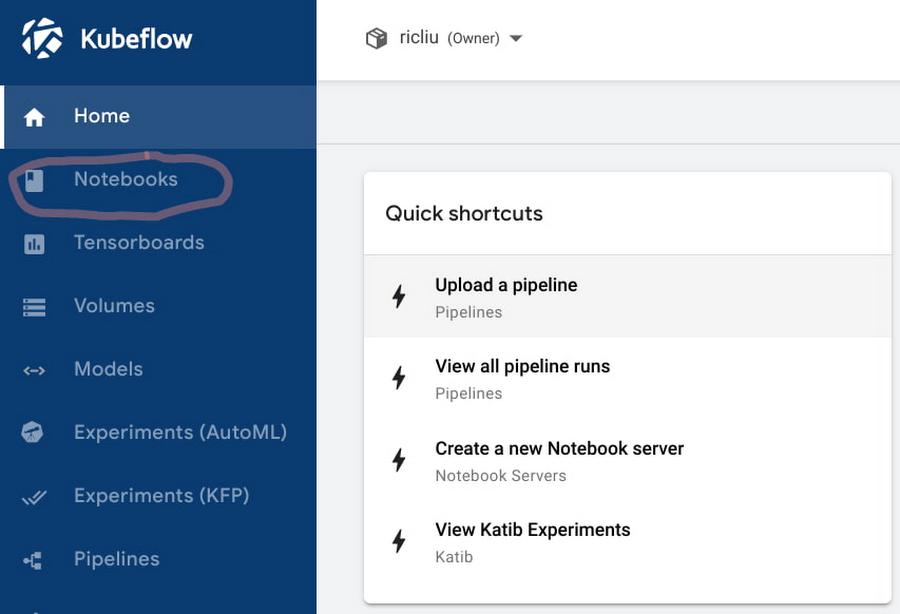

1. In the Kubeflow dashboard, navigate to the “Notebooks” tab.

pip install ray==1.13

ray.init("ray://example-cluster-head-svc:10001")

trainer = Trainer(backend="tensorflow", num_workers=4)

trainer.start()

results = trainer.run(train_func_distributed)

trainer.shutdown()

Serving a ML Model

In this section we will look at how we can serve the machine learning model that we have just trained in the last section.

1. Using the same notebook, wait for the training steps to complete. You should see some output logs with metrics for the model that we have trained.

2 Run the next cell:

serve.start(detached=True, http_options={"host": "0.0.0.0"})

TFMnistModel.deploy(TRAINED_MODEL_PATH)

resp = requests.get(

"http://example-cluster-head-svc:8000/mnist",

json={"array": np.random.randn(28 * 28).tolist()})

Sharing the Ray Cluster with Others

Now that you have a functional workspace with an interactive notebook and a Ray cluster, let’s invite others to collaborate.

1. On Cloud Console, grant the user minimal cluster access here.

2. In the left-hand panel of the Kubeflow dashboard, select “Manage Contributors”.

3. In the “Contributors to your namespace” section, enter the email address of the user to whom you are granting access. Press enter.

4. That user can now select your namespace and access your notebooks, including your Ray cluster.

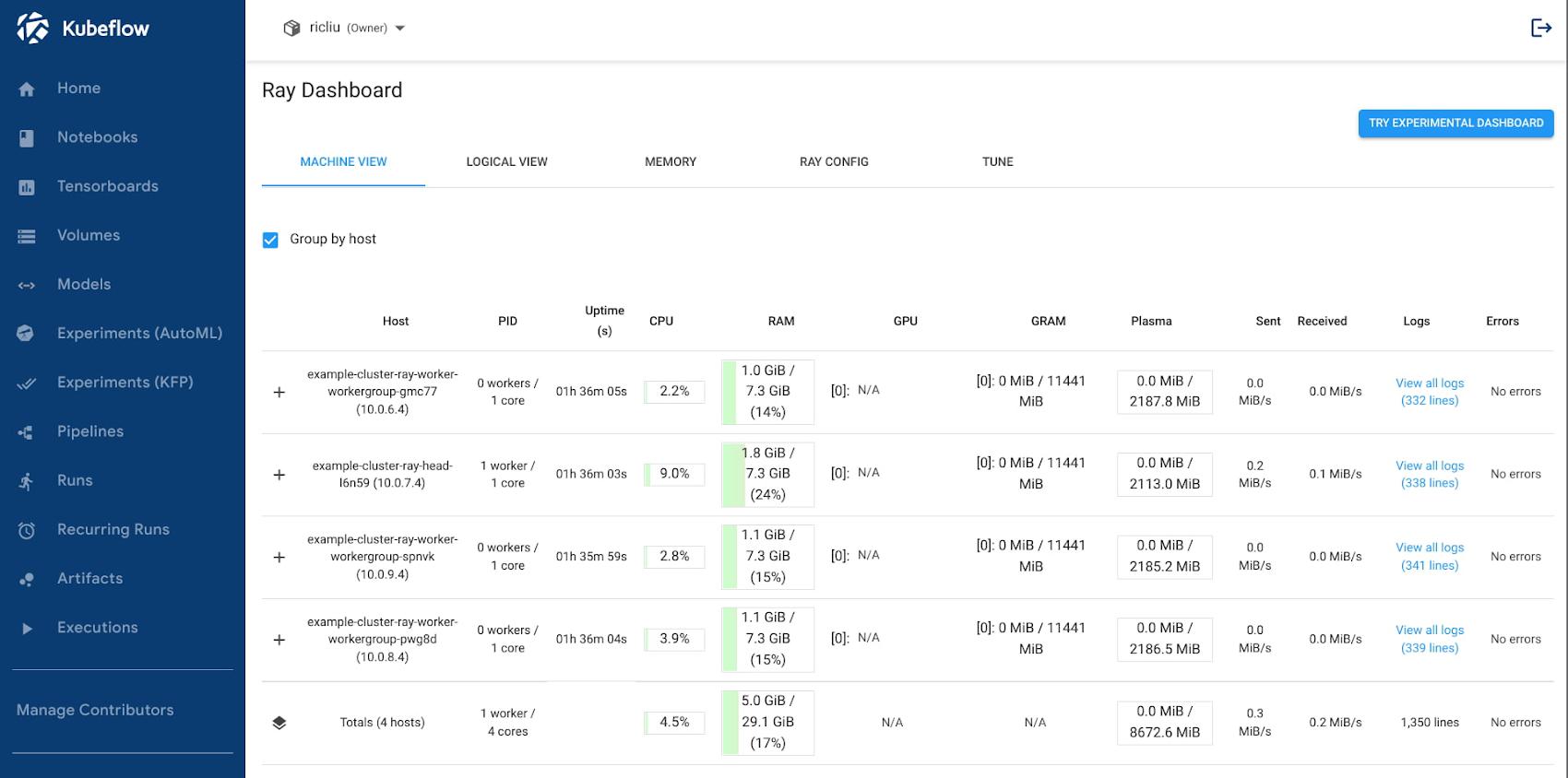

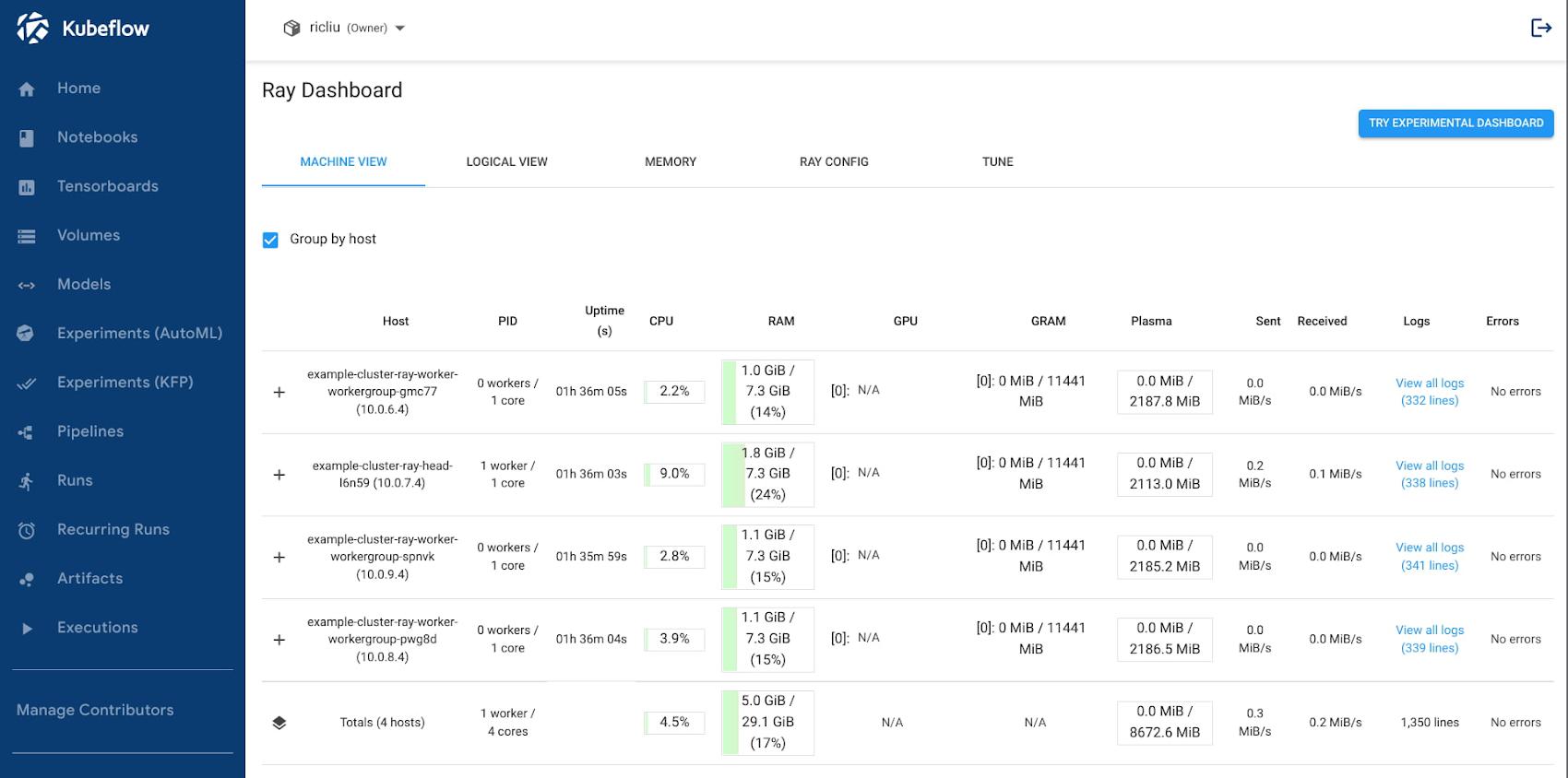

Using Ray Dashboard

Finally, you can also bring up the Ray Dashboard using Istio virtual services. Using these steps, you can bring up a dashboard UI inside the Kubeflow central dashboard console:

1. Create an Istio Virtual Service config file:

apiVersion: networking.istio.io/v1alpha3

kind: VirtualService

metadata:

name: example-cluster-virtual-service

Namespace: kubeflow

spec:

gateways:

- kubeflow-gateway

hosts:

- '*'

http:

- match:

- uri:

prefix: /example-cluster/

rewrite:

uri: /

route:

- destination:

host: example-cluster-head-svc.$(USER_NAMESPACE).svc.local

port:

number: 8265

kubectl apply -f virtual_service.yaml

https://<host>/_/example-cluster/. The Ray dashboard should be displayed in the window:

Conclusion

Let’s take a minute to recap what we have done. In this article, we have demonstrated how to deploy two popular ML frameworks, Kubeflow and Ray, in the same GCP Kubernetes cluster. The setup also takes advantage of GCP features like IAP (Identity-Aware Proxy) for user authentication, which protects your applications while simplifying the experience for cloud admins. The end result is a well-integrated and production-ready system that pulls in useful features offered by each system:

- Orchestrating distributed computing workloads using Ray APIs;

- Multi-user isolation using Kubeflow;

- Interactive notebook environment using Kubeflow notebooks;

- Cluster autoscaling and auto-provisioning using Google Kubernetes Engine

We’ve only scratched the surface of the possibilities, and you can expand from here:

- Integrations with other MLOps offerings, such as Vertex Model monitoring;

- Faster and safer image storage and management, through the Artifact Repository;

- High throughput storage for unstructured data using GCSFuse;

- Improve network throughput for collective communication with NCCL Fast Socket.

We look forward to the growth of your ML Platform and how your team innovates with Machine Learning. Look out for future articles on how to enable additional ML Platform features.

By: Richard Liu (Senior Software Engineer, Google Kubernetes Engine) and Winston Chiang (Product Manager, Google Kubernetes Engine AI/ML)

Source: Google Cloud Blog

For enquiries, product placements, sponsorships, and collaborations, connect with us at [email protected]. We'd love to hear from you!

Our humans need coffee too! Your support is highly appreciated, thank you!