Kubernetes users often share clusters among multiple teams or multiple customers, an arrangement usually called multi-tenancy. Multi-tenancy saves costs and simplifies administration. Although Kubernetes has no first-class concept of end users or tenants, it provides several features that help manage different tenancy requirements, and a number of community projects for multi-tenancy have been built on these features.

In this article, we discuss multi-tenancy in Kubernetes. In particular, we examine the two approaches to multi-tenancy, cluster sharing and multi-cluster, and offer suggestions for enterprises struggling to choose between them.

Control Plane Isolation

Kubernetes offers three mechanisms for control plane isolation: namespaces, RBAC, and quotas.

Familiar to almost every Kubernetes user, a namespace provides a separate naming scope: object names within a namespace can duplicate names in other namespaces. RBAC rules and quotas are also scoped to namespaces.

Role-based access control (RBAC) is commonly used to enforce authorization in the Kubernetes control plane, for both users and workloads (service accounts). Isolated access to API resources can be achieved by setting appropriate RBAC rules.
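To make the RBAC point concrete, here is a minimal sketch that builds a namespaced Role and RoleBinding as plain Python dictionaries and prints them as JSON, which kubectl accepts as well as YAML. The namespace `team-a`, the group `team-a-devs`, the object names, and the chosen resources and verbs are all illustrative assumptions, not something prescribed by the article.

```python
import json


def tenant_rbac(namespace: str, group: str) -> list:
    """Return a Role and RoleBinding that confine `group` to `namespace`."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "tenant-editor", "namespace": namespace},
        "rules": [
            {
                # "" is the core API group (pods, services, configmaps).
                "apiGroups": ["", "apps"],
                "resources": ["pods", "services", "configmaps", "deployments"],
                "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"],
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "tenant-editor-binding", "namespace": namespace},
        "subjects": [
            {"kind": "Group", "name": group, "apiGroup": "rbac.authorization.k8s.io"}
        ],
        # A RoleBinding can only grant access inside its own namespace.
        "roleRef": {
            "kind": "Role",
            "name": role["metadata"]["name"],
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [role, binding]


if __name__ == "__main__":
    # Hypothetical tenant; wrap both objects in a List so they apply together.
    manifest = {"apiVersion": "v1", "kind": "List", "items": tenant_rbac("team-a", "team-a-devs")}
    print(json.dumps(manifest, indent=2))
```

Because both objects are namespaced, members of the assumed group gain no access to other tenants' namespaces or to cluster-scoped resources.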

ResourceQuota can be used to impose a ceiling on the resource usage of a namespace. This ensures that a tenant cannot monopolize a cluster's resources or overwhelm its control plane, which reduces the "noisy neighbor" problem. One restriction is that once a quota on compute resources such as CPU and memory is applied to a namespace, Kubernetes requires every container in that namespace to specify resource requests and limits.

While these three mechanisms can achieve control plane isolation to some extent, they do not solve the problem once and for all. Cluster-wide resources such as CRDs are not namespaced and therefore cannot be isolated well with these mechanisms.
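As a rough illustration, the sketch below pairs a ResourceQuota with a LimitRange, so that containers which omit requests and limits receive defaults instead of being rejected by the quota. All names and quantities are assumed values chosen for the example.

```python
import json


def tenant_quota(namespace: str) -> list:
    """Return a ResourceQuota plus a LimitRange supplying per-container defaults."""
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": namespace},
        "spec": {
            "hard": {
                # Aggregate ceilings for the whole namespace (assumed values).
                "requests.cpu": "4",
                "requests.memory": "8Gi",
                "limits.cpu": "8",
                "limits.memory": "16Gi",
                "pods": "20",
            }
        },
    }
    limit_range = {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "tenant-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Applied when a container does not set its own requests.
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    # Applied when a container does not set its own limits.
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }
    return [quota, limit_range]


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": tenant_quota("team-a")}
    print(json.dumps(manifest, indent=2))
```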

Data Plane Isolation

Data plane isolation generally concerns three aspects: container runtime, storage, and network.

Because containers share the host kernel, attackers can exploit unpatched vulnerabilities in the application and system layers to break out of a container or execute code remotely, gaining access to host resources or to other containers. This risk can be mitigated by running containers in more strongly isolated environments, such as lightweight VMs (e.g., Kata Containers) or user-mode kernels (e.g., gVisor).
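In Kubernetes, a sandboxed runtime is typically selected per pod through a RuntimeClass. The following sketch assumes the nodes' container runtime has been configured with a handler named `runsc` (the name commonly used for gVisor); the class name, pod name, and image are hypothetical.

```python
import json


def sandboxed_pod(name: str, namespace: str, image: str, runtime_class: str = "gvisor") -> list:
    """Return a RuntimeClass and a Pod that opts into it."""
    runtime = {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        # RuntimeClass is cluster-scoped, so it carries no namespace.
        "metadata": {"name": runtime_class},
        # Assumption: must match a handler configured in the node's CRI runtime.
        "handler": "runsc",
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "runtimeClassName": runtime_class,
            "containers": [{"name": "app", "image": image}],
        },
    }
    return [runtime, pod]


if __name__ == "__main__":
    items = sandboxed_pod("web", "team-a", "registry.example.com/app:1.0")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```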

Storage isolation should ensure that volumes cannot be accessed across tenants. Since StorageClass is a cluster-wide resource, its reclaimPolicy should be set to Delete, so that a PV and its data are removed once released instead of being reused by another tenant. In addition, volume types such as hostPath should be prohibited to prevent abuse of the nodes' local storage.
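The two storage recommendations can be sketched as follows: a StorageClass whose reclaimPolicy is Delete, and a small helper that lists the hostPath volumes in a pod manifest. The provisioner name is a placeholder, and the helper only illustrates the check; in a real cluster such a rule would be enforced by an admission mechanism rather than by a script.

```python
import json


def tenant_storage_class(name: str, provisioner: str) -> dict:
    """Return a StorageClass whose volumes are deleted once their claim is removed."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "reclaimPolicy": "Delete",
    }


def host_path_volumes(pod: dict) -> list:
    """Return the names of all hostPath volumes declared in a pod manifest."""
    volumes = pod.get("spec", {}).get("volumes", [])
    return [v["name"] for v in volumes if "hostPath" in v]


if __name__ == "__main__":
    # "csi.example.com" is a hypothetical provisioner name.
    print(json.dumps(tenant_storage_class("tenant-standard", "csi.example.com"), indent=2))

    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "suspicious", "namespace": "team-a"},
        "spec": {
            "containers": [{"name": "app", "image": "registry.example.com/app:1.0"}],
            "volumes": [
                {"name": "logs", "hostPath": {"path": "/var/log"}},
                {"name": "scratch", "emptyDir": {}},
            ],
        },
    }
    print(host_path_volumes(pod))
```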

Network isolation is usually achieved by defining NetworkPolicy resources. By default, all pods can communicate with each other. With NetworkPolicy, traffic between pods can be restricted, reducing unexpected network access. More advanced network isolation can be achieved with a service mesh.
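A common starting point, sketched below under assumed names, is to deny all ingress traffic to a tenant's namespace and then allow traffic only from pods in that same namespace. Note that these policies take effect only if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def tenant_network_policies(namespace: str) -> list:
    """Return a default-deny ingress policy and a same-namespace allow policy."""
    default_deny = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector selects every pod in the namespace;
        # listing Ingress with no rules denies all inbound traffic.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }
    allow_same_namespace = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches pods
            # in the policy's own namespace only.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }
    return [default_deny, allow_same_namespace]


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": tenant_network_policies("team-a")}
    print(json.dumps(manifest, indent=2))
```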

Implementation of Multi-Tenancy

The control plane and data plane isolation mechanisms described above are individual features provided by Kubernetes. There is still a long way to go before they can be delivered as an integrated multi-tenancy solution. Fortunately, the Kubernetes community has a number of open-source projects that specifically address multi-tenancy. These projects fall into two categories: one divides tenants by namespace, while the other provides each tenant with a virtual control plane.

Namespace Per Tenant

In Kubernetes control plane isolation, both RBAC and ResourceQuota are bounded by namespaces. Although dividing tenants by namespace seems easy, a strict rule of one namespace per tenant has significant limitations. For example, it is hard to subdivide a tenant further by team or application, which makes management more difficult. To address this, Kubernetes officially provides a controller that supports hierarchical namespaces. Third-party open-source projects such as Capsule and kiosk also provide more sophisticated multi-tenant support.

Virtual Control Plane

Another option is to provide each tenant with a separate virtual control plane so that the tenant's resources are completely isolated. A virtual control plane is typically implemented by running a separate set of apiservers for each tenant and using a controller to synchronize resources from the tenant apiservers to the original Kubernetes cluster. Each tenant can access only its own apiserver, while the apiservers of the original Kubernetes cluster are typically not externally accessible.

Virtual control planes usually consume more resources because each tenant's control plane is fully separate. Combined with data plane isolation techniques, however, they offer a more thorough and secure multi-tenant solution. One example is the vcluster project.
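The synchronization step can be illustrated with a toy sketch of how a controller might translate an object from a tenant's apiserver into a uniquely named object in a single namespace of the original cluster. The naming scheme, the `example.com/tenant` label, and the function itself are hypothetical; they are not taken from any particular project, and a real syncer would also need to handle name-length limits, status updates, and deletions.

```python
import copy


def to_host_object(tenant: str, host_namespace: str, obj: dict) -> dict:
    """Translate a tenant-side object into its counterpart in the host cluster."""
    meta = obj["metadata"]
    tenant_namespace = meta.get("namespace", "default")
    # Hypothetical scheme: encode tenant-side name and namespace in the host
    # name so objects from different tenant namespaces never collide.
    host_name = f"{meta['name']}-x-{tenant_namespace}-x-{tenant}"

    host = copy.deepcopy(obj)  # leave the tenant's object untouched
    host["metadata"] = {
        "name": host_name,
        "namespace": host_namespace,
        "labels": {**meta.get("labels", {}), "example.com/tenant": tenant},
    }
    return host


if __name__ == "__main__":
    tenant_pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "web", "namespace": "default", "labels": {"app": "web"}},
        "spec": {"containers": [{"name": "app", "image": "registry.example.com/app:1.0"}]},
    }
    host_pod = to_host_object("acme", "tenant-acme", tenant_pod)
    print(host_pod["metadata"]["name"])  # prints "web-x-default-x-acme"
```

The tenant continues to see a pod named `web` in its own `default` namespace, while the original cluster only ever sees the translated object inside the namespace reserved for that tenant.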

Comparison of Two Options

Whether to use "namespace per tenant" or a virtual control plane to achieve multi-tenancy depends largely on the application scenario. Generally speaking, dividing tenants by namespace offers weaker isolation and less flexibility, but it suits lightweight scenarios such as sharing a cluster among multiple teams. When a cluster is shared among multiple customers, a virtual control plane can usually provide more secure isolation.

Multi-Cluster Solutions

As the discussion above shows, sharing Kubernetes clusters is not an easy task. Multi-tenancy is not a built-in feature of Kubernetes and can only be implemented with the help of other projects that isolate tenants on both the control plane and the data plane. This brings considerable learning and adaptation costs for the solution as a whole. As a result, we are seeing an increasing number of users adopt multi-cluster solutions instead.

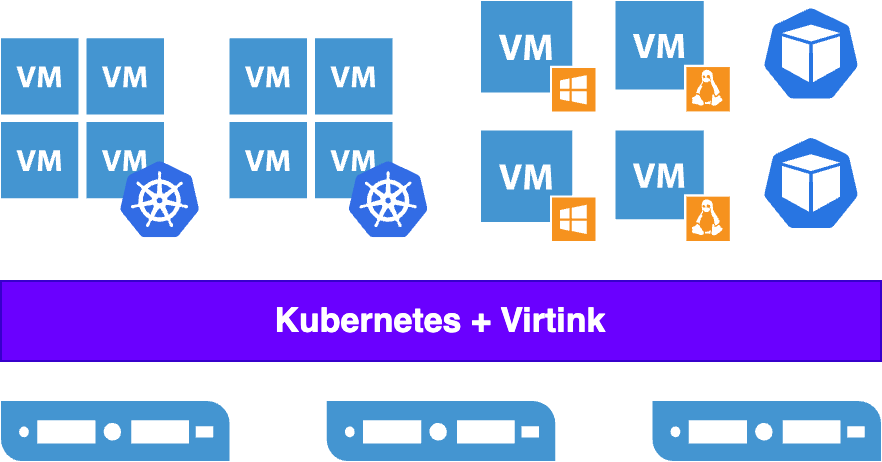

Compared with cluster-sharing solutions, multi-cluster solutions have both pros and cons. Their advantages are a high level of isolation and clear boundaries; their disadvantages are high overhead and O&M costs. Since each cluster requires its own control plane and worker nodes, Kubernetes clusters are usually built on VMs to increase the utilization of the underlying physical hardware. However, traditional virtualization products tend to be large and heavy because they are expected to handle a wide range of scenarios. This makes them too expensive to be the best choice for supporting virtualized Kubernetes clusters.

Based on that, we believe that an ideal virtualization platform for virtualized Kubernetes clusters should have the following characteristics:

- Lightweight. Instead of addressing all scenarios (e.g., VDI), it should focus on server virtualization while eliminating all unnecessary features and overheads.

- Efficient. It should make wide use of paravirtualized I/O technologies such as virtio to increase efficiency.

- Secure. Minimize the host’s attack surface.

- Kubernetes-native. The virtualization platform should itself be a Kubernetes cluster, which reduces learning and O&M costs.

These are exactly the characteristics of the Virtink virtualization engine. Virtink is an open-source, lightweight virtualization add-on for Kubernetes released by SmartX. Based on the Cloud Hypervisor project, Virtink can orchestrate lightweight, modern VMs on Kubernetes.

Virtink uses virtio wherever possible to improve I/O efficiency. In terms of security, Cloud Hypervisor is written in Rust, which makes its memory management safer. In addition, legacy and unnecessary hardware is not supported, which minimizes the attack surface exposed to VMs and improves host security.

Overall, Virtink is lightweight, efficient, and secure, making it a better fit for running Kubernetes inside Kubernetes while lowering the overhead of the virtualization layer.

Moreover, to simplify creating, operating, and maintaining virtualized Kubernetes clusters on Virtink, we developed the knest command-line tool, which lets users create and operate clusters with a single command. For example, to create a virtualized Kubernetes cluster on a Virtink cluster, users simply run `knest create <cluster-name>`. Scaling a cluster out with a single command is also supported.

Conclusion

Although Kubernetes has no built-in multi-tenancy, it provides fine-grained features to support it. Multi-tenant cluster sharing can be achieved with these features and some third-party tools, but doing so entails additional learning and O&M costs. From this perspective, the multi-cluster solution is often more suitable and has been adopted by many users. However, users may then run into other problems, such as the low efficiency and high cost of traditional virtualization platforms.

Built on the efficient and secure Cloud Hypervisor project, Virtink is an open-source virtualization engine optimized for orchestrating lightweight VMs on Kubernetes. We also provide the knest command-line tool, which lets users create and manage clusters with a single command, reducing the overall O&M cost of running multiple clusters.

To learn more about the Virtink project, features and demo, read the previous blog post here.

Guest post by Feng Ye, Software Engineering Manager & Virtink Project Maintainer at SmartX

Source CNCF
