The need to regulate online privacy is a truth so universally acknowledged that even Facebook and Google have joined the chorus of voices crying for change.

Writing in the New York Times, Google CEO Sundar Pichai argued that it is “vital for companies to give people clear, individual choices around how their data is used.” Like all Times opinion pieces, his editorial included multiple Google tracking scripts served without the reader’s knowledge or consent. Had he wanted to, Mr. Pichai could have learned down to the second when a particular reader had read his assurance that Google “stayed focused on the products and features that make privacy a reality.”

Writing in a similar vein in the Washington Post this March, Facebook CEO Mark Zuckerberg called for Congress to pass privacy laws modeled on the European General Data Protection Regulation (GDPR). That editorial was served to readers with a similar bouquet of non-consensual tracking scripts that violated both the letter and spirit of the law Mr. Zuckerberg wants Congress to enact.

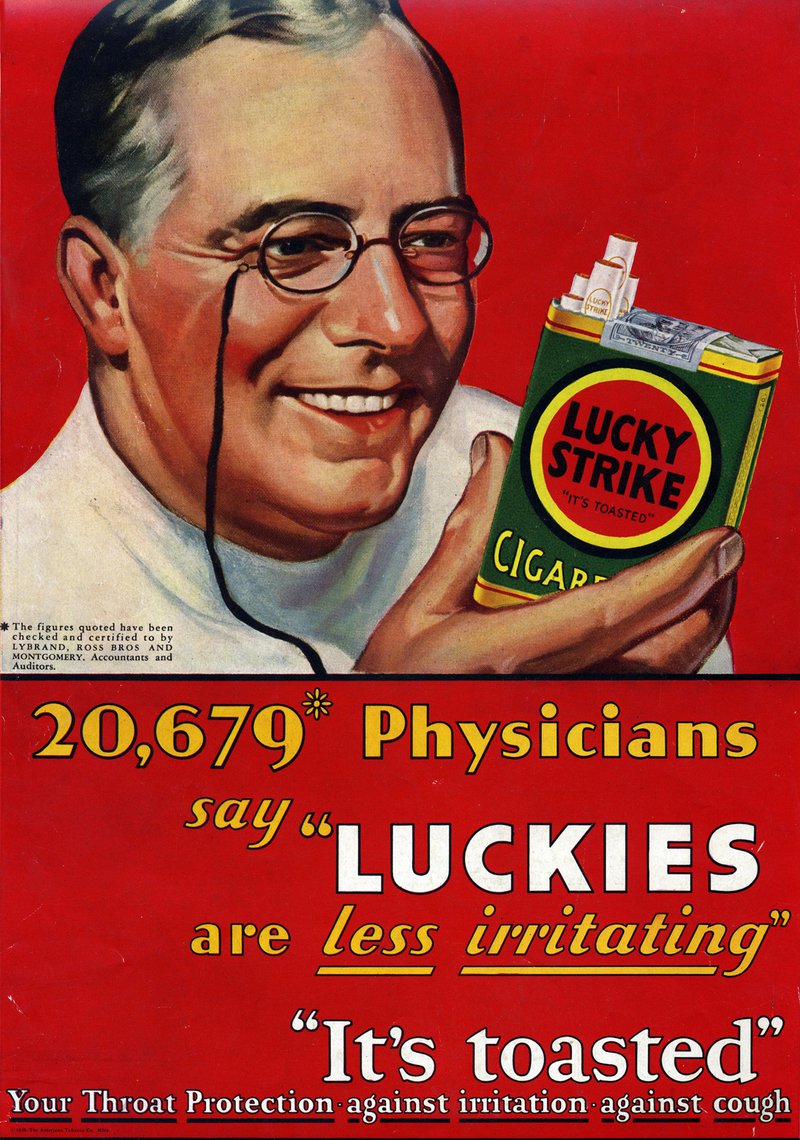

This odd situation recalls the cigarette ads of the 1930s, in which tobacco companies brought out rival doctors to argue over which brand was most soothing to the throat.

No two companies have done more to drag private life into the algorithmic eye than Google and Facebook. Together, they operate the world’s most sophisticated dragnet surveillance operation, a duopoly that rakes in nearly two-thirds of the money spent on online ads. You’ll find their tracking scripts on nearly every web page you visit. They can no more function without surveillance than Exxon Mobil could function without pumping oil from the ground.

So why have the gravediggers of online privacy suddenly grown so worried about the health of the patient?

Part of the answer is a defect in the language we use to talk about privacy. That language, especially as it is codified in law, is not adequate for the new reality of ubiquitous, mechanized surveillance.

In the eyes of regulators, privacy still means what it did in the eighteenth century—protecting specific categories of personal data, or communications between individuals, from unauthorized disclosure. Third parties that are given access to our personal data have a duty to protect it, and to the extent that they discharge this duty, they are respecting our privacy.

Seen in this light, the giant tech companies can make a credible claim to be the defenders of privacy, just like a dragon can truthfully boast that it is good at protecting its hoard of gold. Nobody spends more money securing user data, or does it more effectively, than Facebook and Google.

The question we need to ask is not whether our data is safe, but why there is suddenly so much of it that needs protecting. The problem with the dragon, after all, is not its stockpile stewardship, but its appetite.

This requires us to talk about a different kind of privacy, one that we haven’t needed to give a name to before. For the purposes of this essay, I’ll call it ‘ambient privacy’—the understanding that there is value in having our everyday interactions with one another remain outside the reach of monitoring, and that the small details of our daily lives should pass by unremembered. What we do at home, work, church, school, or in our leisure time does not belong in a permanent record. Not every conversation needs to be a deposition.

Until recently, ambient privacy was a simple fact of life. Recording something for posterity required making special arrangements, and most of our shared experience of the past was filtered through the attenuating haze of human memory. Even police states like East Germany, where one in seven citizens was an informer, were not able to keep tabs on their entire population. Today computers have given us that power. Authoritarian states like China and Saudi Arabia are using this newfound capacity as a tool of social control. Here in the United States, we’re using it to show ads. But the infrastructure of total surveillance is everywhere the same, and everywhere being deployed at scale.

Ambient privacy is not a property of people, or of their data, but of the world around us. Just like you can’t drop out of the oil economy by refusing to drive a car, you can’t opt out of the surveillance economy by forswearing technology (and for many people, that choice is not an option). While there may be worthy reasons to take your life off the grid, the infrastructure will go up around you whether you use it or not.

Because our laws frame privacy as an individual right, we don’t have a mechanism for deciding whether we want to live in a surveillance society. Congress has remained silent on the matter, with both parties content to watch Silicon Valley make up its own rules. The large tech companies point to our willing use of their services as proof that people don’t really care about their privacy. But this is like arguing that inmates are happy to be in jail because they use the prison library. Confronted with the reality of a monitored world, people make the rational decision to make the best of it.

That is not consent.

Ambient privacy is particularly hard to protect where it extends into social and public spaces outside the reach of privacy law. If I’m subjected to facial recognition at the airport, or tagged on social media at a little league game, or my public library installs an always-on Alexa microphone, no one is violating my legal rights. But a portion of my life has been brought under the magnifying glass of software. Even if the data harvested from me is anonymized in strict conformity with the most fashionable data protection laws, I’ve lost something by the fact of being monitored.

One can argue that ambient privacy is a relic of an older world, just like the ability to see the stars in the night sky was a pleasant but inessential feature of the world before electricity. This is the argument Mr. Zuckerberg made when he unilaterally removed privacy protections from every Facebook account back in 2010. Social norms had changed, he explained at the time, and Facebook was changing with them. Presumably now they have changed back.

My own suspicion is that ambient privacy plays an important role in civic life. When all discussion takes place under the eye of software, in a for-profit medium working to shape the participants’ behavior, it may not be possible to create the consensus and shared sense of reality that is a prerequisite for self-government. If that is true, then the move away from ambient privacy will be an irreversible change, because it will remove our ability to function as a democracy.

All of this leads me to see a parallel between privacy law and environmental law, another area where a technological shift forced us to protect a dwindling resource that earlier generations could take for granted.

The idea of passing laws to protect the natural world was not one that came naturally to early Americans. In their experience, the wilderness was something that hungry bears came out of, not an endangered resource that required lawyers to defend. Our mastery over nature was the very measure of our civilization.

But as the balance of power between humans and nature shifted, it became clear that wild spaces could not survive without some kind of protection. In 1864 President Lincoln signed the Yosemite Grant, setting aside the Yosemite Valley as protected public land and laying the groundwork for the national parks. In 1902, the European states signed the first environmental treaty, the Convention for the Protection of Birds Useful to Agriculture, which proscribed certain kinds of hunting technology. In 1916, the National Park Service was established, systematizing the role of the Federal government in conserving public land. In 1964, the Wilderness Act established the principle that some spaces should remain substantially free of human activity. And in 1970, Richard Nixon elevated Mother Nature to cabinet rank by creating the Environmental Protection Agency.

In the span of a little more than a century, we went from treating nature as an inexhaustible resource, to defending it piecemeal, to our current recognition that human activity poses an ecological threat to the planet.

While people argue over the balance to strike between environmental preservation and economic activity, no one now denies that this tradeoff exists—that some technologies and ways of earning money must remain off limits because they are simply too harmful.

This regulatory project has been so successful in the First World that we risk forgetting what life was like before it. Choking smog of the kind that today kills thousands in Jakarta and Delhi was once emblematic of London. The Cuyahoga River in Ohio used to reliably catch fire. In a particularly horrific example of unforeseen consequences, tetraethyl lead added to gasoline raised violent crime rates worldwide for fifty years.

None of these harms could have been fixed by telling people to vote with their wallet, or carefully review the environmental policies of every company they gave their business to, or to stop using the technologies in question. It took coordinated, and sometimes highly technical, regulation across jurisdictional boundaries to fix them. In some cases, like the ban on commercial refrigerants that depleted the ozone layer, that regulation required a worldwide consensus.

We’re at the point where we need a similar shift in perspective in our privacy law. The infrastructure of mass surveillance is too complex, and the tech oligopoly too powerful, to make it meaningful to talk about individual consent. Even experts don’t have a full picture of the surveillance economy, in part because its beneficiaries are so secretive, and in part because the whole system is in flux. Telling people that they own their data, and should decide what to do with it, is just another way of disempowering them.

Our discourse around privacy needs to expand to address foundational questions about the role of automation: To what extent is living in a surveillance-saturated world compatible with pluralism and democracy? What are the consequences of raising a generation of children whose every action feeds into a corporate database? What does it mean to be manipulated from an early age by machine learning algorithms that adaptively learn to shape our behavior?

That is not the conversation Facebook or Google want us to have. Their totalizing vision is of a world with no ambient privacy and strong data protections, dominated by the few companies that can manage to hoard information at a planetary scale. They correctly see the new round of privacy laws as a weapon to deploy against smaller rivals, further consolidating their control over the algorithmic panopticon.

Facebook’s early motto was “move fast and break things” (the ghost of that motto lives on in motivational posters on Facebook’s campus). This was a rare bit of honesty in an industry that is otherwise addicted to utopian thinking. We are now twenty years into an uncontrolled social experiment, run by Silicon Valley, that has broken a great deal for the benefit of a few. While we can’t replace the leaders of this failed experiment, who have set themselves up as autocrats for life, there is no reason we should keep listening to them.

I believe Mr. Pichai and Mr. Zuckerberg are sincere in their personal commitment to privacy, just as I am sure that the CEOs of Exxon Mobil and Shell don’t want their children to live in a world of runaway global warming. But their core business activities are not compatible with their professed values. No amount of eloquence can reconcile the things they say with the things their companies do. If the business model of universal surveillance cannot change, then the world around us will change. That decision is one that belongs to all of us, while we still have the ability to make it.

Republished from openDemocracy.
