Predictive analytics has long been a source of strategic advantage for innovative companies. High-performing firms use it to forecast future outcomes more accurately, plan for unknown events, and discover product and customer opportunities.

One challenge with predictive analytics is obtaining the historical data needed to power the predictions. Some of the most important questions, such as how COVID-19 will affect supply chains or what effect new technologies will have, often have little data to draw from, which makes it difficult to train machine learning models.

Today, predictive analytics customers fill the gap by relying on the judgment of their employees, partners, and vendors. So Google asked: how can we do this more rigorously? What if our collective wisdom could be combined with machine learning in a predictive model?

In 2020, facing the uncertainty of the pandemic, a group of Googlers launched an internal prediction market on Google Cloud to try this. Google first ran a prediction market from 2005 to 2007 that showed promise, and today’s team had two advantages we didn’t have in 2005: many more people at Google to participate, and the ability to build the platform on Google Cloud.

After iterating on the structure and design, and analyzing over 175,000 predictions from over 10,000 Google employees, we’d like to share the design patterns and technologies that helped to generate accurate forecasts in challenging domains like COVID-19, engineering milestones, and emerging technology trends.

How to Structure a Prediction Market

The core insight behind prediction markets is to incentivize the right people to forecast accurately, thereby producing a consensus forecast that is more accurate than any individual.

For example, let’s say you’re trying to predict: will interest rates rise in 2022? You may have ML models to forecast customer behavior and even predict macroeconomic trends, but anticipating events like this requires drawing on knowledge and judgments in your organization.

Accurately predicting the future with questions like this can be very difficult. On the one hand, it is tempting to be overconfident in a simple answer; on the other, it is easy to lament that one cannot possibly know. In fact, evidence from prediction markets and forecasting tournaments indicates that, with the right structure, organizations can achieve remarkable accuracy.

From our experience, we have arrived at five key principles behind soliciting accurate forecasts from your organization. Let’s walk through them step by step.

1. Provide a UI that makes it easy for people to express nuanced, precise predictions.

Most questions can be posed as forecasting a probability (as in the example below), a date (“when will interest rates rise?”), or a number (“what will the interest rate be next year?”). For each type, we give our participants two options: (a) with one click, predict that the probability, date, or number should be lower or higher than the current consensus; or (b) provide a specific forecast as a range.

For example, consider the following probability market:

We recommend paying particular attention to the precise resolution condition, to ensure that everyone is forecasting the same thing. Precise forecasting only works on precisely defined questions. Nominate admins from your org to review new question proposals and to ensure that each question specifies the right source data and time frame.

2. Incentivize experts from around your organization to make predictions on questions where they have insights.

Like other prediction markets, ours uses an incentive system similar to an options market. We convert predictions into “bids” and “asks”, interpreting the probabilities, numbers, or dates as “prices” and the confidence as “volume”. The application broker matches opposing bids and asks at the best price. Each trade results in a contract between the parties about the outcome, as shown in the following pseudocode:

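```python
def process_bid(event, bid):
    """Trades a bid against the best-priced asks for an event."""
    # _get_asks_by_increasing_price and _execute_trade are application
    # helpers; _execute_trade receives the number of contracts traded.
    unfilled_bid_volume = bid.volume
    for ask in _get_asks_by_increasing_price(event):
        # If the next ask price is higher than the bid price, or the bid
        # is filled, there will be no more trades.
        if unfilled_bid_volume == 0 or ask.price > bid.price:
            break
        # If this ask more than fills the remaining volume, trade the
        # remainder and leave the rest of the ask on the book.
        if ask.volume > unfilled_bid_volume:
            ask.volume -= unfilled_bid_volume
            _execute_trade(event, bid, ask, unfilled_bid_volume)
            unfilled_bid_volume = 0
            break
        # Otherwise, trade the full volume of the ask with this bid.
        unfilled_bid_volume -= ask.volume
        _execute_trade(event, bid, ask, ask.volume)
        ask.mark_filled()
    # Any volume left over can rest on the book as an open bid.
    return unfilled_bid_volume
```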

In the same way that financial markets discover consensus prices by efficiently matching orders, prediction markets discover consensus forecasts by efficiently matching predictions. This maximizes the incentive for predictors to spot opportunities to correct the market by making a better prediction. When real-world events resolve the question, one party to each traded contract gains, and both parties can learn from the experience.
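The conversion from a UI prediction into bids and asks is not spelled out above, so here is a minimal sketch of one plausible mapping for a probability market. The `Order` class, the `prediction_to_orders` function, and the rules themselves are hypothetical: a one-click “higher” becomes a bid at the current consensus, a one-click “lower” becomes an ask at the consensus, and a range forecast becomes a bid at its low end and an ask at its high end, with confidence used as volume.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Order:
    side: str     # "bid" (buy) or "ask" (sell)
    price: float  # probability, number, or date mapped to a number
    volume: int   # the predictor's confidence


def prediction_to_orders(consensus: float,
                         direction: Optional[str] = None,
                         low: Optional[float] = None,
                         high: Optional[float] = None,
                         confidence: int = 1) -> List[Order]:
    """Converts a one-click or range prediction into orders (hypothetical mapping)."""
    if direction == "higher":
        # Expecting a value above consensus: buy at the consensus price.
        return [Order("bid", consensus, confidence)]
    if direction == "lower":
        # Expecting a value below consensus: sell at the consensus price.
        return [Order("ask", consensus, confidence)]
    if low is not None and high is not None and low <= high:
        # Range forecast: buy at or below `low`, sell at or above `high`.
        return [Order("bid", low, confidence), Order("ask", high, confidence)]
    raise ValueError("expected direction='higher'/'lower' or a range with low <= high")
```

For example, `prediction_to_orders(0.55, low=0.60, high=0.70, confidence=3)` returns a bid at 0.60 and an ask at 0.70, each with volume 3. The resulting orders can then be fed into a matching routine like `process_bid`.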

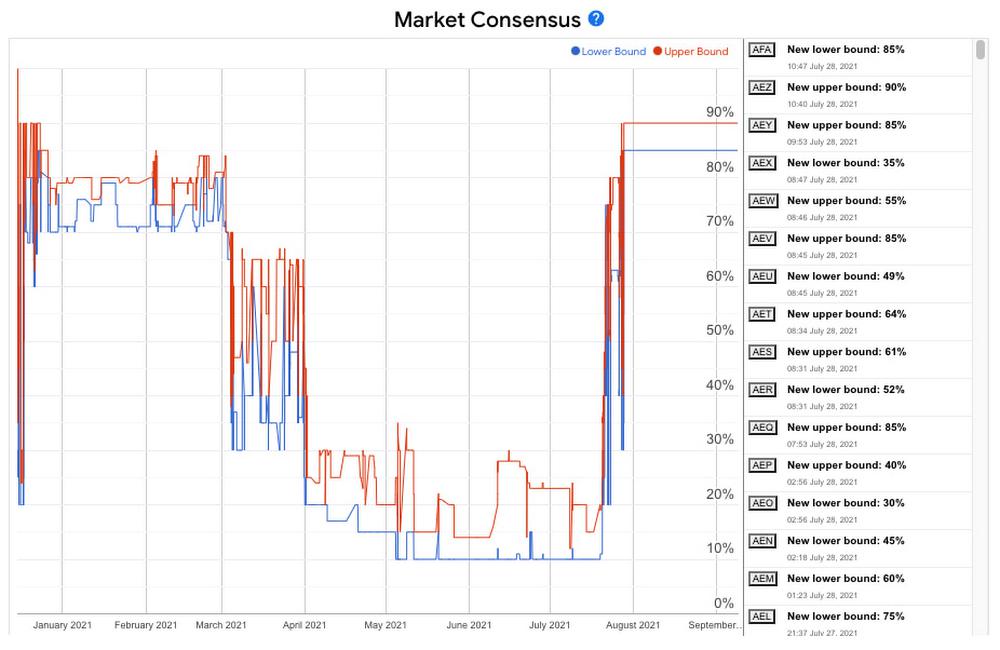

3. Support continuous predicting, so that the consensus forecasts update in real time as new information surfaces.

A particular challenge for predictive analytics is that new information can surface at any time. The stream of predictions, interpreted as bids and asks, represents a consensus of all participants as time passes. By graphing the bid-ask spread – the values for which, at that moment, no party wishes to take the other side – you can see the evolution of this consensus.
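A minimal sketch of tracking that spread over time, assuming the unfilled order book is available as hypothetical snapshots of bid and ask prices keyed by timestamp. Because matching trades away any overlap, resting bids sit below resting asks, and the interval between the best bid and the best ask is the range that no participant currently wants to trade across.

```python
from typing import Dict, List, Optional, Tuple


def bid_ask_spread(bids: List[float], asks: List[float]) -> Tuple[Optional[float], Optional[float]]:
    """Returns (best bid, best ask) for the unfilled orders resting on the book."""
    best_bid = max(bids) if bids else None  # highest price anyone will pay
    best_ask = min(asks) if asks else None  # lowest price anyone will accept
    return best_bid, best_ask


def spread_history(
    snapshots: Dict[str, Tuple[List[float], List[float]]]
) -> Dict[str, Tuple[Optional[float], Optional[float]]]:
    """Maps each timestamp, in sorted order, to its (best bid, best ask) pair."""
    return {ts: bid_ask_spread(bids, asks) for ts, (bids, asks) in sorted(snapshots.items())}
```

Plotting the two values of each pair as lines over time shows the consensus band narrowing or shifting as new information arrives.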

4. Give your predictors feedback on their performance, to help them improve their forecast accuracy over time.

Once a question is resolved, in addition to notifying your users of the result, you can contextualize their performance: how well are they doing in that category, or in the current quarter? How do they compare to the top forecasters?
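The post does not say which accuracy metric drives this feedback. As an assumption, the sketch below uses the Brier score (squared error between a probability forecast and the 0/1 outcome; lower is better), a common choice for probability questions, and ranks each user against others in the same category. The record layout and function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical resolved forecast: (user, category, probability, outcome 1 or 0).
Record = Tuple[str, str, float, int]


def brier_by_user_and_category(records: List[Record]) -> Dict[Tuple[str, str], float]:
    """Mean Brier score per (user, category); lower is better."""
    errors = defaultdict(list)
    for user, category, prob, outcome in records:
        errors[(user, category)].append((prob - outcome) ** 2)
    return {key: mean(vals) for key, vals in errors.items()}


def rank_in_category(scores: Dict[Tuple[str, str], float],
                     user: str, category: str) -> Tuple[int, int]:
    """Returns (rank, number of forecasters) within a category; rank 1 is best."""
    peers = sorted(score for (_, cat), score in scores.items() if cat == category)
    return peers.index(scores[(user, category)]) + 1, len(peers)
```

Filtering `records` to the current quarter before scoring gives the quarterly view, and comparing a user's score with the top-ranked scores answers how they stack up against the best forecasters.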

One incentive we offer successful predictors – beyond leaderboards and badges – is the ability to have a bigger influence on the market consensus by increasing the confidence of their predictions. Over time, predictors gravitate towards topics where they predict more accurately, which can also increase the market’s forecast accuracy.

Forecasting is like most other skills we use in the workplace, in that we improve over time. If incentives and feedback are structured correctly, expect improvements in accuracy both at the individual level and in the overall market. One key here is to ask questions over shorter time horizons. Instead of asking “what will our new service adoption rate be next year,” start by predicting the adoption rate next quarter, and iterate.

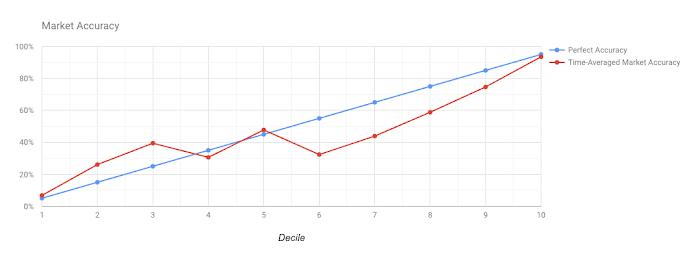

The blue line represents perfect accuracy, i.e., events forecast to be 10% likely actually happen 10% of the time. The red line represents the market consensus forecast. Even though the questions are very challenging, the market is fairly accurate.
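A chart like this is a calibration curve. The post does not describe how it was computed; a minimal sketch, assuming equal-width probability bins over resolved questions, groups forecasts by probability and compares each group's average forecast with how often the events actually happened.

```python
from typing import List, Tuple


def calibration_curve(forecasts: List[float], outcomes: List[int],
                      n_bins: int = 10) -> List[Tuple[float, float, int]]:
    """Returns (mean forecast, observed frequency, count) for each non-empty bin.

    A perfectly calibrated market has mean forecast == observed frequency
    in every bin, which is the diagonal line in the chart.
    """
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for p, y in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # put p == 1.0 in the top bin
        bins[idx].append((p, y))
    curve = []
    for bucket in bins:
        if bucket:
            mean_p = sum(p for p, _ in bucket) / len(bucket)
            freq = sum(y for _, y in bucket) / len(bucket)
            curve.append((mean_p, freq, len(bucket)))
    return curve
```

With few resolved questions per bin the observed frequencies are noisy, so the per-bin count is returned alongside each point.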

5. Synthesize the market forecasts with machine learning.

Prediction markets fill the gap when there isn’t sufficient historical data to rely on machine learning alone. Most often, there is some data to inform your strategic decisions, but not enough to determine the right choice. For example, perhaps you have ample time-series data on consumer spending behavior on one platform, but none on a new platform you have yet to launch.

There are two ways to synthesize prediction markets and machine learning. First, use the output of the prediction market as input into a larger predictive analytics system that also draws from machine-learned inference on time series data. Alternatively, add machine learning systems as market makers, providing baseline bid-ask spreads and liquidity that increases the incentive for accurate predictions.
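A minimal sketch of both approaches for a probability question, under stated assumptions. `market_maker_quotes` is a hypothetical ML market maker that quotes a bid and an ask a fixed distance around a model's probability, so anyone who believes the model is wrong has something to trade against. `blend_forecasts` is a swapped-in pooling method, not one the post describes: a weighted average in log-odds space (logarithmic pooling) of the market consensus and the model forecast.

```python
import math
from typing import Tuple


def market_maker_quotes(model_prob: float, half_spread: float = 0.05,
                        volume: int = 10) -> Tuple[Tuple[float, int], Tuple[float, int]]:
    """Returns ((bid price, volume), (ask price, volume)) around a model forecast."""
    bid = max(0.0, model_prob - half_spread)  # prices stay within [0, 1]
    ask = min(1.0, model_prob + half_spread)
    return (bid, volume), (ask, volume)


def blend_forecasts(market_prob: float, model_prob: float,
                    market_weight: float = 0.5) -> float:
    """Weighted average of two probabilities in log-odds space."""
    def logit(p: float) -> float:
        p = min(max(p, 1e-6), 1 - 1e-6)  # avoid infinite log-odds at 0 or 1
        return math.log(p / (1 - p))

    z = market_weight * logit(market_prob) + (1 - market_weight) * logit(model_prob)
    return 1 / (1 + math.exp(-z))
```

A wider `half_spread` or a smaller `volume` makes the model a weaker anchor, leaving more room for human predictors to move the consensus; `market_weight` plays the same role when the two forecasts are blended downstream.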

Architecting the Platform on Google Cloud

By leveraging Google Cloud Platform (GCP), we were able to build the entire platform with a small team in less than a year and scale to thousands of users. We’d like to share the specific GCP tools we used to prototype, build, and grow a robust platform very quickly:

- Serverless computing was the main workhorse for the platform. We use App Engine, and are also experimenting with Cloud Run and Cloud Functions to implement the trading backend.

- Serverless is a great toolkit for building an application before you decide what type of backends you might want to manage yourself on Compute Engine. It handles deployments, versioning, and resource scaling without writing additional code. This lets us stay flexible with the backend architecture.

- Cloud Scheduler and Cloud Tasks complement a serverless architecture well, making it easy to run periodic jobs, like providing badges to our top predictors, and long-running background tasks, like market resolutions and calculating analytics.

- Cloud Firestore served as our application database, running in Datastore mode. As a scalable NoSQL cloud database, it lets you develop your frontend flexibly, updating your schema as you design and build more features. It also supports complex, hierarchical data – in our case, storing bids, asks, and traded positions as children of the market entity makes querying for data fast and easy.

- Using Data Studio, you can dashboard insights from the predictions, for example to visualize trends across markets over time. With Looker, you can aggregate the market predictions with existing predictive analytics to produce better insights than either would produce alone.

- Cloud Firestore data can also be exported to BigQuery, where you can run analytics and use BigQuery ML to refine the human consensus algorithmically. Once you have this data, Vertex AI lets you train forecasting models with little to no code, combining human and machine reasoning on difficult strategic questions.

- Dataflow enables us to do large batch operations on the data store – such as restoring from a backup – as a managed process.

We also use a variety of basic GCP tools for the operational side of running our prediction market:

- Logs Explorer to debug tricky situations, such as when queries time out or we hit an issue with an internal API

- Cloud Profiler and Cloud Trace tools helped us identify scaling bottlenecks early, such as unnecessary synchronous Cloud Firestore queries that could be done asynchronously.

- Cloud Error Reporting made it easy to configure monitoring for errors, jump to the underlying issues, and determine which errors were most frequent.

Get Started

Ready to take your predictive analytics to the next level and unlock the wisdom of your organization? If you’d like to discuss building a platform like this with us, please provide your information in this form.

By: Dan Schwarz (Senior Software Engineer) and Lindsay Taylor (Key Account Director, Manufacturing)

Source: Google Cloud Blog
