When you deploy models to the Vertex AI prediction service, each model is deployed to its own VM by default. To make hosting more cost-effective, we’re excited to introduce model co-hosting in public preview, which lets you host multiple models on the same VM for better utilization of memory and compute resources. How many models you deploy to the same VM will depend on model sizes and traffic patterns, but the feature is particularly useful when you have many deployed models with sparse traffic.

Understanding the Deployment Resource Pool

Model co-hosting introduces the concept of a Deployment Resource Pool, which groups models so that they share the resources of a VM. Models in the same pool can share a VM whether they are deployed to the same endpoint or to different endpoints.


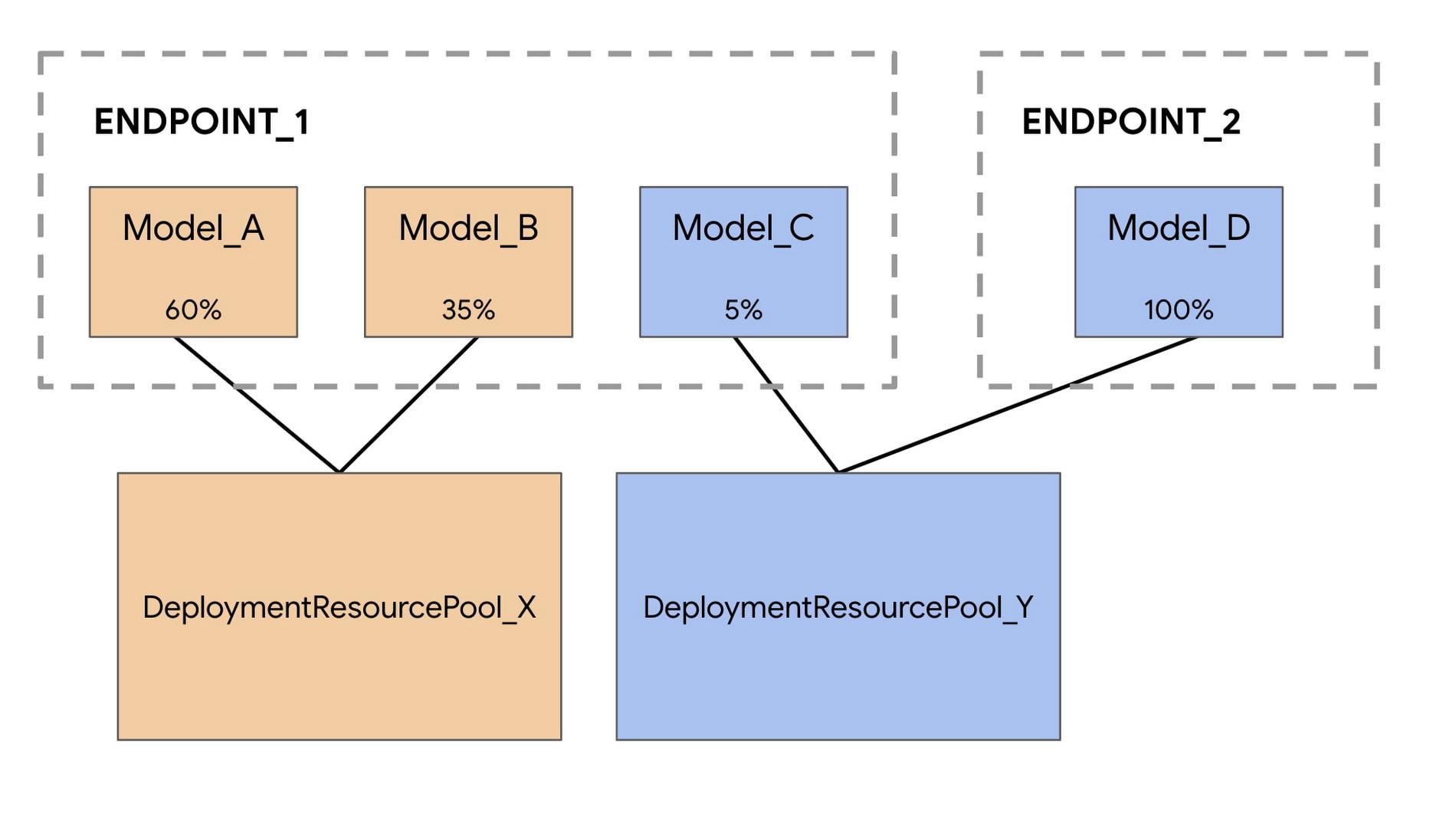

For example, let’s say you have four models and two endpoints, as shown in the diagram.

Model_A, Model_B, and Model_C are all deployed to Endpoint_1, with traffic split among them, and Model_D is deployed to Endpoint_2, where it receives 100% of that endpoint’s traffic.

Instead of having each model assigned to a separate VM, we can group Model_A and Model_B to share a VM, making them part of DeploymentResourcePool_X. We can also group models that are not on the same endpoint, so Model_C and Model_D can be hosted together in DeploymentResourcePool_Y.
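To make the example concrete, here is a small sketch that models the diagram as plain Python data: which endpoint each model sits behind and which pool hosts it. It assumes each pool runs on a single VM (a `min_replica_count` of 1), so counting pools counts VMs; the dictionaries and helper names are illustrative only and are not part of the Vertex AI SDK.

```python
from collections import defaultdict

# Illustrative layout from the example: endpoint of each model, and the
# DeploymentResourcePool that hosts it.
ENDPOINT_OF = {
    "Model_A": "Endpoint_1",
    "Model_B": "Endpoint_1",
    "Model_C": "Endpoint_1",
    "Model_D": "Endpoint_2",
}
POOL_OF = {
    "Model_A": "DeploymentResourcePool_X",
    "Model_B": "DeploymentResourcePool_X",
    "Model_C": "DeploymentResourcePool_Y",
    "Model_D": "DeploymentResourcePool_Y",
}


def models_by_pool(pool_of):
    """Group model names by the resource pool that hosts them."""
    groups = defaultdict(list)
    for model, pool in pool_of.items():
        groups[pool].append(model)
    return dict(groups)


def endpoints_per_pool(pool_of, endpoint_of):
    """Return the set of endpoints each pool serves (a pool may span endpoints)."""
    result = defaultdict(set)
    for model, pool in pool_of.items():
        result[pool].add(endpoint_of[model])
    return dict(result)


# ASSUMPTION: one VM per model without co-hosting, one VM per pool with it.
pools = models_by_pool(POOL_OF)
print("VMs without co-hosting:", len(POOL_OF))
print("VMs with co-hosting:", len(pools))
print(endpoints_per_pool(POOL_OF, ENDPOINT_OF))
```

Under that assumption the four models fit on two VMs instead of four, and DeploymentResourcePool_Y serves both endpoints.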

Note that in this first release, all models in a resource pool must use the same container image, meaning the same version of a Vertex AI pre-built TensorFlow prediction container. Other model frameworks and custom containers are not yet supported.
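If you manage many models, a local pre-flight check can catch incompatible groupings before you deploy. The sketch below is hypothetical and not a Vertex AI API: it takes a mapping of model name to `serving_container_image_uri` and rejects the group if the URIs differ or if any URI lacks the prefix of the pre-built TensorFlow image used later in this post. Treating that prefix as the test for "pre-built TensorFlow container" is an assumption; pre-built images also live in other regional registries.

```python
def check_pool_compatible(container_images):
    """Hypothetical check mirroring the preview constraints for one pool.

    container_images: mapping of model name -> serving_container_image_uri.
    Returns True if all models use one identical pre-built TensorFlow image,
    otherwise raises ValueError.
    """
    # ASSUMPTION: pre-built TensorFlow images are recognized by this prefix,
    # e.g. us-docker.pkg.dev/vertex-ai/prediction/tf2-cpu.2-7:latest
    tf_prefix = "us-docker.pkg.dev/vertex-ai/prediction/tf2-"
    uris = set(container_images.values())
    if len(uris) > 1:
        raise ValueError(f"models use different container images: {sorted(uris)}")
    for model, uri in container_images.items():
        if not uri.startswith(tf_prefix):
            raise ValueError(f"{model} is not using a pre-built TensorFlow container: {uri}")
    return True


image = "us-docker.pkg.dev/vertex-ai/prediction/tf2-cpu.2-7:latest"
print(check_pool_compatible({"text-model-1": image, "text-model-2": image}))  # True
```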

Co-hosting models with Vertex AI Predictions

You can set up model co-hosting in a few steps. The main difference from a standard deployment is that you first create a DeploymentResourcePool and then deploy your model within that pool.

Step 1: Create a DeploymentResourcePool

You can create a DeploymentResourcePool with the following command. There’s no cost associated with this resource until the first model is deployed.

```python
import json

PROJECT_ID = "YOUR_PROJECT"  # Replace with your Google Cloud project ID
REGION = "us-central1"
VERTEX_API_URL = REGION + "-aiplatform.googleapis.com"
VERTEX_PREDICTION_API_URL = REGION + "-prediction-aiplatform.googleapis.com"
MULTI_MODEL_API_VERSION = "v1beta1"

# Give the pool a name
DEPLOYMENT_RESOURCE_POOL_ID = "my-resource-pool"

# Machine type and autoscaling bounds shared by every model deployed to the pool
CREATE_RP_PAYLOAD = {
    "deployment_resource_pool": {
        "dedicated_resources": {
            "machine_spec": {"machine_type": "n1-standard-4"},
            "min_replica_count": 1,
            "max_replica_count": 2,
        }
    },
    "deployment_resource_pool_id": DEPLOYMENT_RESOURCE_POOL_ID,
}
CREATE_RP_REQUEST = json.dumps(CREATE_RP_PAYLOAD)

# Notebook shell command: {VAR} is substituted from the Python variables above
!curl \
-X POST \
-H "Authorization: Bearer $(gcloud auth print-access-token)" \
-H "Content-Type: application/json" \
https://{VERTEX_API_URL}/{MULTI_MODEL_API_VERSION}/projects/{PROJECT_ID}/locations/{REGION}/deploymentResourcePools \
-d '{CREATE_RP_REQUEST}'
```
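If you would rather build the Step 1 request in a reusable function than with module-level variables, here is a minimal sketch. It produces the same URL and JSON body as the notebook cell above; the function name and the replica-count check are our own illustrative additions, not part of the Vertex AI API.

```python
import json


def build_create_pool_request(project_id, region, pool_id,
                              machine_type="n1-standard-4",
                              min_replica_count=1, max_replica_count=2,
                              api_version="v1beta1"):
    """Return (url, json_body) for creating a DeploymentResourcePool.

    Mirrors the payload from Step 1. The min/max check is a local sanity
    check added for illustration.
    """
    if not 1 <= min_replica_count <= max_replica_count:
        raise ValueError("need 1 <= min_replica_count <= max_replica_count")
    url = (f"https://{region}-aiplatform.googleapis.com/{api_version}"
           f"/projects/{project_id}/locations/{region}/deploymentResourcePools")
    payload = {
        "deployment_resource_pool": {
            "dedicated_resources": {
                "machine_spec": {"machine_type": machine_type},
                "min_replica_count": min_replica_count,
                "max_replica_count": max_replica_count,
            }
        },
        "deployment_resource_pool_id": pool_id,
    }
    return url, json.dumps(payload)


url, body = build_create_pool_request("my-project", "us-central1", "my-resource-pool")
print(url)
```

Keeping the URL and payload together in one function makes it harder to send a pool ID to the wrong project or region.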

Step 2: Create a model

Models can be imported to the Vertex AI Model Registry at the end of a custom training job, or you can upload them separately if the model artifacts are saved to a Cloud Storage bucket. You can upload a model through the UI or with the SDK using the following command:

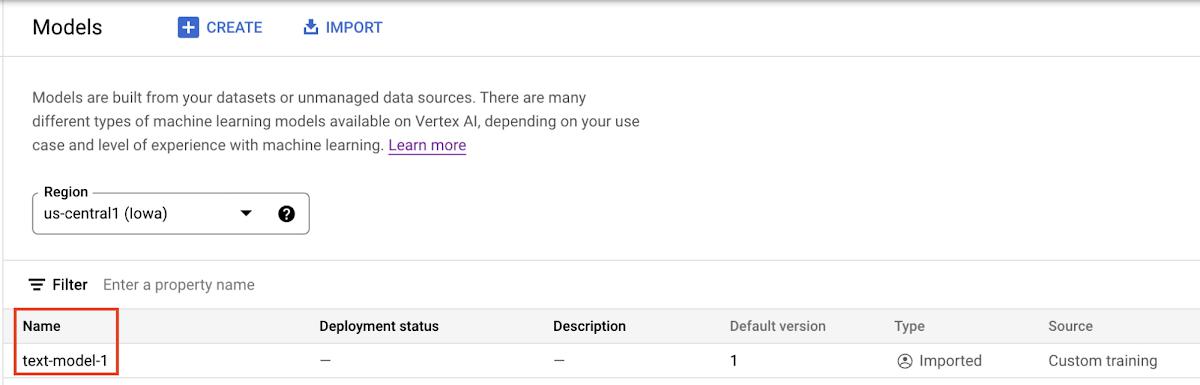

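```python
from google.cloud import aiplatform

# Initialize the SDK with the project and region from Step 1
aiplatform.init(project=PROJECT_ID, location=REGION)

# REPLACE artifact_uri with the Cloud Storage path to your model artifacts
my_model = aiplatform.Model.upload(
    display_name="text-model-1",
    artifact_uri="gs://{YOUR_GCS_BUCKET}",
    serving_container_image_uri="us-docker.pkg.dev/vertex-ai/prediction/tf2-cpu.2-7:latest",
)
```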

When the model is uploaded, you’ll see it in the model registry. Note that the deployment status is empty since the model hasn’t been deployed yet.
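Step 4 needs the model ID (and the endpoint ID) rather than the full resource name. Assuming you have the full name, for example from the SDK object’s `resource_name` property in the form `projects/PROJECT/locations/REGION/models/MODEL_ID`, a minimal helper can pull out the trailing segment. The helper `resource_id` is hypothetical, not an SDK function.

```python
def resource_id(resource_name, collection):
    """Return the trailing ID from a Vertex AI resource name.

    Raises ValueError if the name does not end with "<collection>/<id>".
    """
    parts = resource_name.strip("/").split("/")
    if len(parts) < 2 or parts[-2] != collection or not parts[-1]:
        raise ValueError(f"not a {collection} resource name: {resource_name!r}")
    return parts[-1]


print(resource_id("projects/my-project/locations/us-central1/models/123", "models"))
```

In the notebook this could look like `MODEL_ID = resource_id(my_model.resource_name, "models")`, and the same helper works for `endpoint.resource_name` with `"endpoints"`.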

Step 3: Create an endpoint

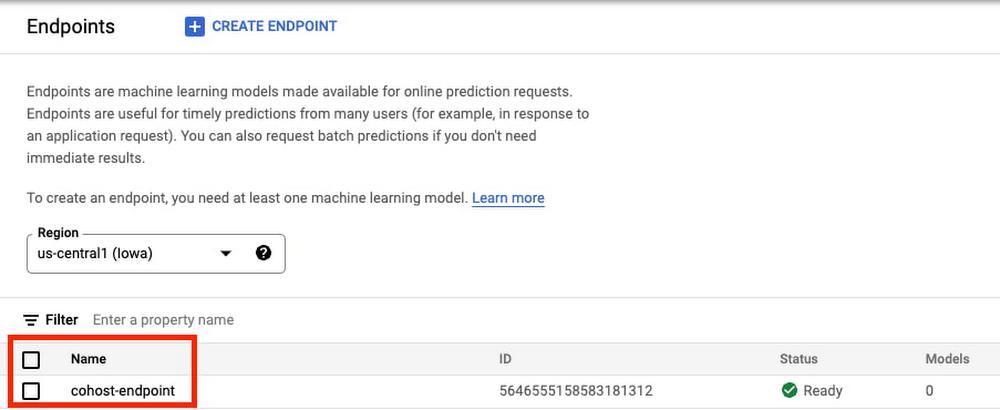

Next, create an endpoint with the SDK or in the console. Creating an endpoint is a separate step from deploying a model to it: the new endpoint won’t serve any model until you deploy one in Step 4.

```python
endpoint = aiplatform.Endpoint.create(display_name="cohost-endpoint")
```

When your endpoint is created, you’ll be able to see it in the console.

Step 4: Deploy Model in a Deployment Resource Pool

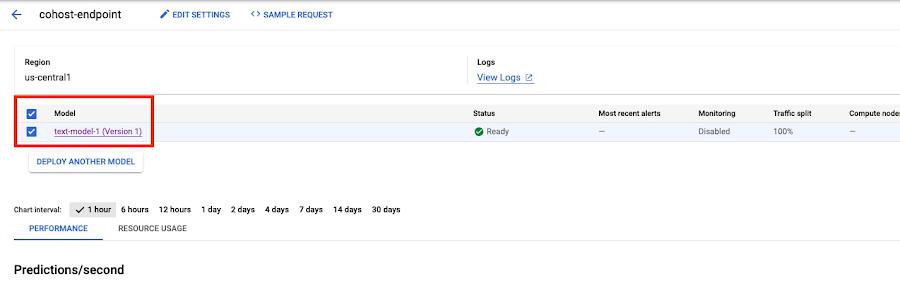

The last step before getting predictions is to deploy the model within the DeploymentResourcePool you created.

```python
import json
import pprint as pp

MODEL_ID = "YOUR_MODEL_ID"  # Replace with the ID of the model uploaded in Step 2
ENDPOINT_ID = "YOUR_ENDPOINT_ID"  # Replace with the ID of the endpoint created in Step 3

MODEL_NAME = "projects/{project_id}/locations/{region}/models/{model_id}".format(
    project_id=PROJECT_ID, region=REGION, model_id=MODEL_ID
)
SHARED_RESOURCE = "projects/{project_id}/locations/{region}/deploymentResourcePools/{deployment_resource_pool_id}".format(
    project_id=PROJECT_ID,
    region=REGION,
    deployment_resource_pool_id=DEPLOYMENT_RESOURCE_POOL_ID,
)

# Deploy the model into the shared pool; "0" refers to the model deployed by this request
DEPLOY_MODEL_PAYLOAD = {
    "deployedModel": {"model": MODEL_NAME, "shared_resources": SHARED_RESOURCE},
    "trafficSplit": {"0": 100},
}
DEPLOY_MODEL_REQUEST = json.dumps(DEPLOY_MODEL_PAYLOAD)
pp.pprint("DEPLOY_MODEL_REQUEST: " + DEPLOY_MODEL_REQUEST)

!curl -X POST \
-H "Authorization: Bearer $(gcloud auth print-access-token)" \
-H "Content-Type: application/json" \
https://{VERTEX_API_URL}/{MULTI_MODEL_API_VERSION}/projects/{PROJECT_ID}/locations/{REGION}/endpoints/{ENDPOINT_ID}:deployModel \
-d '{DEPLOY_MODEL_REQUEST}'
```
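In the `trafficSplit` map, the key `"0"` stands for the model being deployed by this request, and the percentages must add up to 100. When an endpoint already serves a model, you split traffic between that deployed model’s ID and `"0"`. The sketch below builds and checks such a payload; the function name, the model and pool paths, and the deployed model ID `"1234567890"` are made-up placeholders.

```python
import json


def build_deploy_payload(model_name, shared_resource, traffic_split):
    """Return the JSON body for endpoints.deployModel into a shared pool.

    traffic_split maps deployed-model IDs to percentages; "0" denotes the
    model being deployed in this request. Values must be non-negative and
    sum to 100 (checked locally before sending).
    """
    if any(p < 0 for p in traffic_split.values()) or sum(traffic_split.values()) != 100:
        raise ValueError(f"traffic split must be non-negative and sum to 100: {traffic_split}")
    payload = {
        "deployedModel": {"model": model_name, "shared_resources": shared_resource},
        "trafficSplit": traffic_split,
    }
    return json.dumps(payload)


# Hypothetical: 50% to an already-deployed model, 50% to the new one ("0").
body = build_deploy_payload(
    "projects/my-project/locations/us-central1/models/MODEL_B_ID",
    "projects/my-project/locations/us-central1/deploymentResourcePools/my-resource-pool",
    {"1234567890": 50, "0": 50},
)
print(body)
```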

When the model is deployed, you’ll see it marked as ready in the console. To co-host more models, deploy them to the same DeploymentResourcePool, either through the endpoint you already created or through a new endpoint.
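Co-hosting more models amounts to repeating Step 4 with the same `shared_resources` value. The sketch below plans one `deployModel` call per (model, endpoint) pair, echoing the earlier example where Model_C and Model_D share a pool across two endpoints. It only builds URLs and bodies and does not call the API; the IDs are placeholders, and giving each new model 100% of traffic assumes its endpoint has no other deployed models.

```python
import json


def plan_cohosted_deployments(project_id, region, pool_id, model_endpoint_pairs,
                              api_version="v1beta1"):
    """Build (url, body) for one deployModel call per (model_id, endpoint_id) pair.

    Every request points at the same DeploymentResourcePool, so all models
    share its VMs even when they sit behind different endpoints.
    ASSUMPTION: each endpoint is empty, so the new model gets 100% of traffic.
    """
    parent = f"projects/{project_id}/locations/{region}"
    pool = f"{parent}/deploymentResourcePools/{pool_id}"
    host = f"https://{region}-aiplatform.googleapis.com/{api_version}"
    planned = []
    for model_id, endpoint_id in model_endpoint_pairs:
        body = {
            "deployedModel": {"model": f"{parent}/models/{model_id}", "shared_resources": pool},
            "trafficSplit": {"0": 100},
        }
        url = f"{host}/{parent}/endpoints/{endpoint_id}:deployModel"
        planned.append((url, json.dumps(body)))
    return planned


plan = plan_cohosted_deployments(
    "my-project", "us-central1", "my-resource-pool",
    [("MODEL_C_ID", "ENDPOINT_1_ID"), ("MODEL_D_ID", "ENDPOINT_2_ID")],
)
for url, _ in plan:
    print(url)
```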

Step 5: Get a prediction

Once the model is deployed, you can call your endpoint the same way you would call any other Vertex AI endpoint.

```python
x_test = [
    "The movie was spectacular. Best acting I've seen in a long time and a great cast. I would definitely recommend this movie to my friends!"
]

endpoint.predict(instances=x_test)
```
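`endpoint.predict` corresponds to the endpoint’s `predict` method, whose REST request body is a JSON object with an `instances` list. If you want to send the request yourself, for example with `curl` as in Steps 1 and 4, here is a minimal sketch of building it. The function name is hypothetical, and the default host is the regional Vertex AI API host; pass a different `host` if your setup needs one.

```python
import json


def build_predict_request(project_id, region, endpoint_id, instances,
                          api_version="v1beta1", host=None):
    """Return (url, json_body) for the endpoint's :predict REST method.

    host defaults to the regional Vertex AI API host. Nothing is sent here.
    """
    host = host or f"{region}-aiplatform.googleapis.com"
    url = (f"https://{host}/{api_version}/projects/{project_id}"
           f"/locations/{region}/endpoints/{endpoint_id}:predict")
    return url, json.dumps({"instances": instances})


url, body = build_predict_request(
    "my-project", "us-central1", "ENDPOINT_ID",
    ["The movie was spectacular. Best acting I've seen in a long time and a great cast."],
)
print(url)
print(body)
```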

What’s next

You now know the basics of co-hosting models on the same VM. For an end-to-end example, check out this codelab, or refer to the docs for more details. Now it’s time to start deploying some models of your own!

By: Nikita Namjoshi (Developer Advocate)

Source: Google Cloud Blog
