Regulatory Surveillance of Trading Activity with Google Cloud

This blog post focuses on one such mechanism: a cloud-native pipeline, developed collaboratively by GTS Securities LLC, Strike Technologies LLC (its technology provider) and Google Cloud, that is designed to help meet cost-saving targets, deliver operational efficiencies and keep pace with demanding, evolving regulatory requirements.


GTS is a leading global, electronic market maker that combines market expertise with innovative, proprietary technology. As a quantitative trading firm continually building for the future, GTS leverages the latest in artificial intelligence systems and sophisticated pricing models to bring consistency, efficiency, and transparency to today’s financial markets. GTS accounts for 3-5% of daily cash equities volume in the U.S. and trades over 30,000 different instruments globally, including listed and OTC equities, ETFs, futures, commodities, options, fixed income, foreign exchange, and interest rate products. GTS is the largest Designated Market Maker (DMM) at the New York Stock Exchange, responsible for nearly $12 trillion of market capitalization.

Detecting flashing exceptions

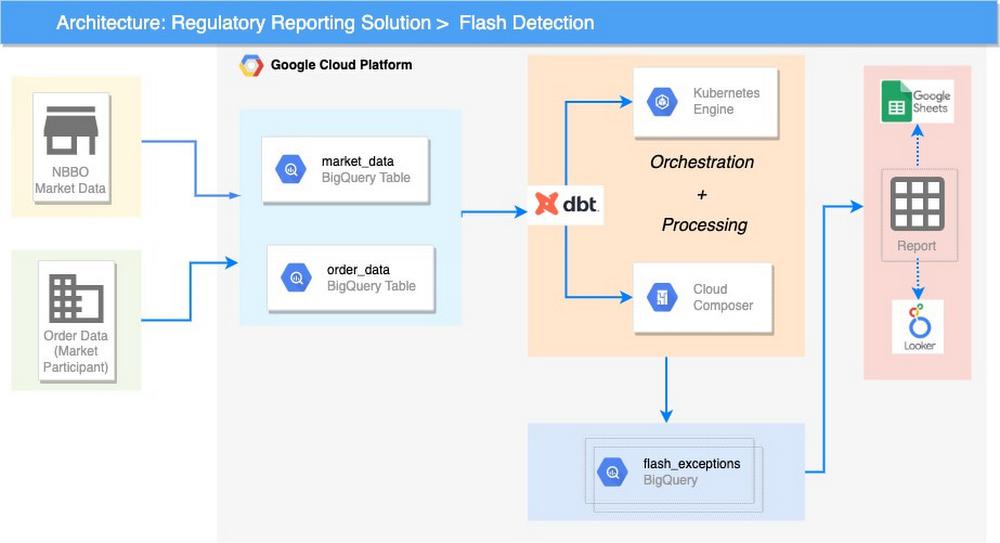

Google Cloud modeled its flashing-detection analytics solution on GTS’ post-trade surveillance system: a large-scale simulation framework that merges the firm’s bidirectional trading order data with the high-resolution market-data feeds disseminated by the exchanges into a single, uniform stream. Numerous surveillance reports then analyze this stream to perform a fully automated regulatory compliance review.
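At its core, combining order data with market data into one uniform stream is a k-way merge of inputs that are each already sorted by time. The following is a minimal Python sketch under that assumption; the `Event` type, its fields and the `"market"`/`"order"` labels are hypothetical and not GTS’ actual schema.

```python
import heapq
from dataclasses import dataclass, field
from typing import Iterable, Iterator


@dataclass(order=True)
class Event:
    # Ordering and equality use only the nanosecond timestamp.
    ts_ns: int
    source: str = field(compare=False)  # hypothetical label: "market" or "order"
    payload: dict = field(compare=False, default_factory=dict)


def merge_streams(market: Iterable[Event], orders: Iterable[Event]) -> Iterator[Event]:
    """Lazily merge two individually time-sorted streams into one time-ordered stream."""
    return heapq.merge(market, orders)
```

On timestamp ties, `heapq.merge` yields the element from the earlier argument first, so passing market data first lets a same-nanosecond quote precede an order event; whether that is the right tie-break is a design decision for the real system.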

Datasets

- Market Data

- Public high resolution tick data disseminated by the exchanges

- A unidirectional data stream of quotes, trades, symbol trading status, etc.

- These per-exchange feeds are collected, normalized and merged to a per-symbol uniform stream

- For the purposes of this surveillance, the market-wide best bid and ask price (NBBO – National Best Bid / Offer) at any given time are used

- Order Data

- Proprietary order activity of the market participant under review

- A bidirectional data stream initiated by the market participant

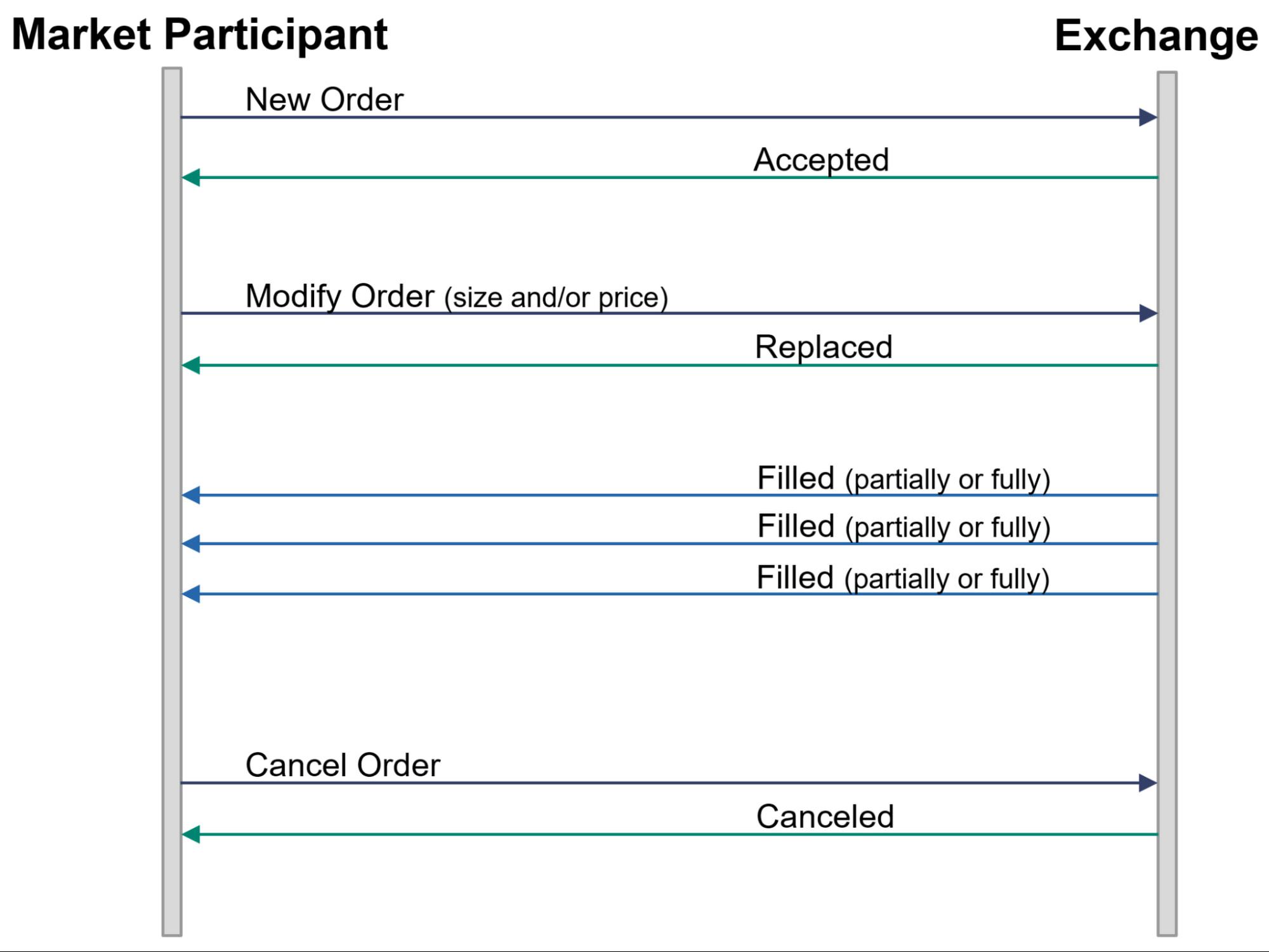

- A simplified life cycle of a single order is illustrated below:
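To illustrate how per-exchange quotes become the market-wide NBBO, here is a simplified Python sketch: it keeps the latest bid/ask per exchange and emits a new NBBO whenever the maximum bid or minimum ask changes. The `(ts_ns, exchange, bid, ask)` record layout is an assumption, and the sketch deliberately ignores quote sizes, locked/crossed markets and other rules a production NBBO calculation would need to handle.

```python
from typing import Dict, Iterable, Iterator, Optional, Tuple

# Hypothetical, simplified quote record: (ts_ns, exchange, bid, ask)
Quote = Tuple[int, str, float, float]
# Emitted NBBO update: (ts_ns, best_bid, best_ask)
NBBO = Tuple[int, float, float]


def nbbo_stream(quotes: Iterable[Quote]) -> Iterator[NBBO]:
    """Yield (ts_ns, best_bid, best_ask) each time the market-wide best prices change.

    Assumes quotes for a single symbol arrive sorted by timestamp.
    """
    latest: Dict[str, Tuple[float, float]] = {}  # most recent bid/ask per exchange
    last: Optional[Tuple[float, float]] = None
    for ts_ns, exchange, bid, ask in quotes:
        latest[exchange] = (bid, ask)
        best_bid = max(b for b, _ in latest.values())
        best_ask = min(a for _, a in latest.values())
        if (best_bid, best_ask) != last:  # only emit actual NBBO changes
            last = (best_bid, best_ask)
            yield ts_ns, best_bid, best_ask
```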

Flashing detection

This surveillance is designed to capture the manipulative practice of placing orders with no intention to trade; instead, these orders are placed on the market to induce a favorable price movement.

A flashing activity is composed of a “flash” event and a “take” event, connected by a market movement:

- Flash
  - Entry of short-lived orders on one side of the market
  - These orders are not meant to be executed (filled), only to show artificial interest in the market; as such, they are canceled a short period after being entered
  - Note that the flashed-order lifespan is a parameter of the surveillance that should be configured to fit the trading profile of the firm under review
  - For demonstration purposes we used 500ms (though in practice flashing can happen over a much smaller timespan)
- Market movement
  - Other market participants react to the flashed order(s), moving the price in the indicated direction, i.e., causing the NBBO to “improve”
- Take
  - Placement of one or more orders on the other side of the market that get executed against other market participants who joined the market at the improved price
  - Note that the take order can be “staged” on the market before the flash order is entered, or entered after it
  - Furthermore, the take event should occur in proximity to the flash event; this proximity is another configurable parameter
  - For demonstration purposes we configured this parameter to 10 seconds
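The flash and take definitions above can be sketched in Python as two filters: an order is a flash if it was canceled unfilled within the lifespan, and a take is any opposite-side fill executed within the proximity window after the flash was entered. The `Order`/`Fill` records, the single-symbol scope and the choice to measure proximity from the flash’s entry time to the take’s fill time are assumptions for illustration; the 500ms and 10-second values are the demonstration parameters quoted above. The market-movement condition is not checked here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

MS = 1_000_000  # nanoseconds per millisecond

# Demonstration values from the text; tune per firm under review.
FLASH_MAX_LIFESPAN_NS = 500 * MS
TAKE_PROXIMITY_NS = 10_000 * MS


@dataclass
class Order:  # hypothetical order summary for a single symbol
    order_id: str
    side: str                 # "BUY" or "SELL"
    entry_ns: int
    cancel_ns: Optional[int]  # None if never canceled
    filled_qty: int


@dataclass
class Fill:  # hypothetical execution record
    order_id: str
    side: str
    ts_ns: int
    price: float


def is_flash(o: Order) -> bool:
    """Short-lived order: canceled within the lifespan and never filled."""
    return (
        o.cancel_ns is not None
        and o.filled_qty == 0
        and o.cancel_ns - o.entry_ns <= FLASH_MAX_LIFESPAN_NS
    )


def pair_flash_with_takes(orders: List[Order], fills: List[Fill]) -> List[Tuple[Order, Fill]]:
    """Pair each flash with opposite-side fills executed within the proximity window.

    A take order may be staged before the flash, so proximity is measured on
    the fill (execution) time rather than on the take order's entry time.
    """
    opposite = {"BUY": "SELL", "SELL": "BUY"}
    pairs: List[Tuple[Order, Fill]] = []
    for flash in filter(is_flash, orders):
        for fill in fills:
            if (
                fill.side == opposite[flash.side]
                and flash.entry_ns <= fill.ts_ns <= flash.entry_ns + TAKE_PROXIMITY_NS
            ):
                pairs.append((flash, fill))
    return pairs
```

The nested loop is quadratic; at production volumes the same pairing would more naturally be expressed as a time-bounded join per symbol.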

As indicated above, flashing detection requires marrying the order activity of the market participant under review with the public market data, essentially synchronizing two large, discrete, nanosecond-resolution datasets. Beyond the technical challenge of joining these datasets, a trading platform might introduce a small latency when processing large market-data volumes, and there might be slight clock differences between the trading platform’s and the surveillance system’s views of the market data.

To compensate for these differences, the surveillance system reviews the NBBO within a window near the take event. The NBBO window length is a configurable parameter of the surveillance and should be set based on the specifics of the trading firm under review; for demonstration purposes we used a 1000ms window. Because the data is discrete, there might not be any NBBO update within the window. In such cases we carry forward the last NBBO update prior to the window.
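The windowing rule, including the carry-forward fallback, can be sketched with a binary search over time-sorted NBBO updates. This Python sketch assumes the window is the 1000ms interval ending at the take time; the text does not say whether the real window is trailing, centered or asymmetric, so treat that choice as hypothetical.

```python
import bisect
from typing import List, Tuple

NBBO = Tuple[int, float, float]  # (ts_ns, best_bid, best_ask)

NBBO_WINDOW_NS = 1_000 * 1_000_000  # 1000ms demonstration value


def nbbo_in_window(updates: List[NBBO], take_ns: int,
                   window_ns: int = NBBO_WINDOW_NS) -> List[NBBO]:
    """Return NBBO updates with timestamps in [take_ns - window_ns, take_ns].

    If no update falls inside the window, carry forward the last update prior
    to the window (or return an empty list if none exists).
    `updates` must be sorted by timestamp.
    """
    times = [u[0] for u in updates]
    lo = bisect.bisect_left(times, take_ns - window_ns)  # first update at/after window start
    hi = bisect.bisect_right(times, take_ns)             # one past last update at/before take
    if lo < hi:
        return updates[lo:hi]
    return [updates[lo - 1]] if lo > 0 else []
```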

Finally, any sequence of events that fits the scenario outlined above is flagged as a potential exception for review by the trading firm’s compliance officer.
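For a single flash/take candidate, the final check might look like the following Python sketch. It assumes “improve” means the best bid rose after a flashed buy (or the best ask fell after a flashed sell) somewhere in the NBBO window, and that the take executed at a price better than the pre-flash NBBO; these thresholds are illustrative, not the production rule.

```python
from typing import List, Tuple

NBBO = Tuple[int, float, float]  # (ts_ns, best_bid, best_ask)


def flag_candidate(flash_side: str, nbbo_before_flash: NBBO,
                   nbbo_near_take: List[NBBO], take_price: float) -> bool:
    """Hypothetical flagging rule for one flash/take pair."""
    _, bid0, ask0 = nbbo_before_flash
    if flash_side == "BUY":
        # Flashed bids should lift the best bid; the firm then sells above the old bid.
        moved = any(bid > bid0 for _, bid, _ in nbbo_near_take)
        return moved and take_price > bid0
    if flash_side == "SELL":
        # Flashed offers should lower the best ask; the firm then buys below the old ask.
        moved = any(ask < ask0 for _, _, ask in nbbo_near_take)
        return moved and take_price < ask0
    raise ValueError(f"unknown side: {flash_side!r}")
```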

Modernizing this solution using SQL Analytics on Google Cloud

To implement the above, the collaboration applies data analytics best practices to a financial services problem. The solution can enable efficient, flexible data processing, and it also supports the organizational processes that enable reliable reporting and minimize fire drills.

At the highest level, each macro step (e.g., load data, execute transforms, run data-quality checks) is executed in a container. Cloud Composer (managed Apache Airflow) orchestrates these containers, which run BigQuery SQL jobs. As discussed in detail in the next section, these queries are defined using DBT models instead of raw SQL.

DBT (data build tool) codifies the regulatory reporting transformations: it runs the SQL logic that implements the surveillance rules required by the regulator’s rulebook. With DBT, models are parameterised into reusable components and executed as SQL in BigQuery. DBT fits nicely into the stack and helps in creating a CI/CD pipeline that feeds normalized data to BigQuery.
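One way to keep the surveillance parameters configurable per firm is to pass them to a DBT run as project variables, which models read with DBT’s `var()` Jinja function. The Python helper below only builds the command line for `dbt run --select ... --vars ...`; the model name `flashing_exceptions` and the variable names are hypothetical.

```python
import json
from typing import List


def dbt_run_command(model: str, params: dict) -> List[str]:
    """Build a `dbt run` invocation that passes surveillance parameters as vars.

    --vars accepts a YAML string; JSON is valid YAML, so json.dumps output works.
    """
    return ["dbt", "run", "--select", model, "--vars", json.dumps(params, sort_keys=True)]


# Hypothetical parameters mirroring the demonstration values in this post.
cmd = dbt_run_command(
    "flashing_exceptions",
    {"flash_lifespan_ms": 500, "take_proximity_ms": 10_000, "nbbo_window_ms": 1_000},
)
```

In a containerized step, the resulting list could be passed to `subprocess.run(cmd, check=True)`, so changing a firm’s thresholds means changing configuration rather than SQL.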

BigQuery is a critical solution component because it can be cost-efficient, offer minimal operational overhead, and solve this high-granularity problem. By cheaply storing, rapidly querying and joining huge, granular datasets, BigQuery helps provide firms with a consistent source of high-quality data. This single source serves multiple report types.

The entire infrastructure in Google Cloud is deployed using Terraform, an open-source Infrastructure as Code (IaC) tool. Storing configuration in declarative form (Terraform configuration files, Kubernetes YAML, etc.) promotes portability and repeatability and enables the platform to scale.

Solution overview

Give it a try

This approach is available to help you meet your organization’s reporting needs. Please review our user guide, whose Tutorials section provides a step-by-step guide to constructing a simple data processing pipeline that maintains data quality, auditability, and ease of change and deployment, while also supporting the requirements of regulatory reporting.

By: Vishakha Sadhwani (Customer Engineer, Google Cloud) and Victor Zigdon (Director of Trading Analytics, GTS Securities, LLC)

Source: Google Cloud Blog
