With the recent release of the VMware Customer Reliability Engineering (CRE) team’s Reliability Scanner, we wanted to take some time to expand on the namespace label check, including how labelling can be used for alert routing.

The Reliability Scanner is a Sonobuoy plugin that lets an end user include and configure a suggested set of checks to run against a cluster. We can configure a check to look for a specific label key on a namespace (the check fails if the key does not exist). The `include_labels` configuration option can also pull through labels configured on a namespace, which ensures those details are included in the scan's report.
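To make the check's behaviour concrete, here is a minimal Python model of a namespace label check. It is not the Reliability Scanner's implementation or configuration format. The function `check_namespace_labels`, the result type, and the name-to-labels input are hypothetical, and the sketch assumes the namespaces and their labels have already been fetched from the cluster.

```python
from dataclasses import dataclass, field


@dataclass
class NamespaceLabelCheckResult:
    """Outcome of the (hypothetical) label check for one namespace."""

    namespace: str
    passed: bool
    # Labels copied into the report, mirroring the include_labels idea.
    included_labels: dict[str, str] = field(default_factory=dict)


def check_namespace_labels(
    namespaces: dict[str, dict[str, str]],
    required_key: str,
    include_labels: list[str],
) -> list[NamespaceLabelCheckResult]:
    """Fail any namespace whose labels lack `required_key`.

    Labels named in `include_labels` are copied into each result when present,
    so they show up in the report whether or not the check passed.
    """
    results = []
    for name, labels in sorted(namespaces.items()):
        included = {key: labels[key] for key in include_labels if key in labels}
        results.append(
            NamespaceLabelCheckResult(
                namespace=name,
                passed=required_key in labels,
                included_labels=included,
            )
        )
    return results


if __name__ == "__main__":
    example = {"team-a": {"owner": "team-a-owners"}, "team-b": {}}
    for result in check_namespace_labels(example, "owner", ["owner"]):
        status = "PASS" if result.passed else "FAIL"
        print(result.namespace, status, result.included_labels)
```

Separating the pass/fail decision from the copied labels reflects the two behaviours described above: the required key decides the outcome, while the included labels only enrich the report.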

When a single cluster runs many different applications delivered by various teams, this model of shared responsibility brings new challenges. Recording a namespace owner helps identify the team responsible for the components or services in a cluster, so that team can be given timely signals.

Below is an example of a namespace label check configured to look for an owner key.

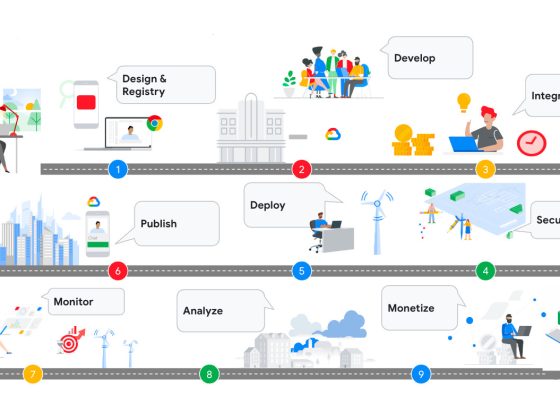

In this post, we’ll take a look at installing VMware Tanzu Kubernetes Grid extensions for monitoring into our existing Tanzu Kubernetes Grid cluster, so that respective namespace owners can be notified when alerts are generated for components in a particular namespace.

Installing Tanzu Kubernetes Grid extensions for monitoring

To make this happen, we first need to install a few components into our workload cluster that handle installing and managing the lifecycle of our extensions (and applications).

Let’s now create some namespaces and deploy our example application to each of them.

We can now use curl to verify that our services are up and running.

Next, we will install our Tanzu Kubernetes Grid monitoring extension into the cluster. We’ll start by creating our namespace and any associated roles.

We now have a namespace and the necessary permissions available, so let’s apply our monitoring configuration to the cluster.

First, we need to configure our monitoring values to include our ingress and a trivial Prometheus alert for our service example. We’ll do this by creating a secret with our monitoring configuration data in the target namespace.
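Kubernetes stores the entries of a Secret's `data` field base64-encoded. As a sketch of what such a configuration secret looks like, the snippet below wraps a values file in a Secret manifest. The name `prometheus-data-values`, the namespace `tanzu-system-monitoring`, and the `values.yaml` key are assumptions for illustration, and the values content is a placeholder rather than the extension's real schema.

```python
import base64
import json


def build_values_secret(name: str, namespace: str, values_yaml: str) -> dict:
    """Wrap a data-values file in a Kubernetes Secret manifest.

    Secret `data` entries must be base64-encoded; the key name is an assumption.
    """
    encoded = base64.b64encode(values_yaml.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {"values.yaml": encoded},
    }


if __name__ == "__main__":
    # Hypothetical name/namespace; the values content is only a placeholder.
    secret = build_values_secret(
        "prometheus-data-values",
        "tanzu-system-monitoring",
        "# monitoring data values (ingress, alerting rules) go here\n",
    )
    print(json.dumps(secret, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed manifest could be applied directly. Alternatively, `kubectl create secret generic` with `--from-file` produces the same kind of Secret without hand-encoding the data.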

We can now install our extension; the extension manager will handle the installation for us.

We can use kubectl's `--watch` flag to follow along as the application is installed into our target namespace.

Let’s send some requests to our applications so we can see our metrics coming through. We’ll use `hey`, a small HTTP load generator, to send load to our applications over a 10-second period.
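The walkthrough itself uses `hey`. Purely to illustrate what a duration-based load generator does, the hypothetical Python stand-in below keeps several worker threads sending GET requests until a deadline passes and then tallies the status codes. The `send` parameter is an assumption added so the request function can be swapped out (for example, in tests).

```python
import threading
import time
import urllib.error
import urllib.request
from collections import Counter
from typing import Callable


def http_get_status(url: str) -> int:
    """Send one GET request and return its HTTP status (0 if no response)."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; report their status code.
        return err.code
    except OSError:
        # Connection refused, DNS failure, timeout, and similar errors.
        return 0


def generate_load(
    url: str,
    duration_s: float = 10.0,
    workers: int = 10,
    send: Callable[[str], int] = http_get_status,
) -> Counter:
    """Hit `url` from `workers` threads until `duration_s` elapses; tally statuses."""
    deadline = time.monotonic() + duration_s
    counts: Counter = Counter()
    lock = threading.Lock()

    def worker() -> None:
        while time.monotonic() < deadline:
            status = send(url)
            with lock:  # Counter updates are not atomic across threads
                counts[status] += 1

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counts
```

For example, `generate_load("http://localhost:8080/", duration_s=10)` would approximate a 10-second run against a hypothetical local endpoint; a `0` in the tally marks requests that never received a response.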

Let’s take a look at our Prometheus UI to observe our metrics as they come through.

We can now update our Prometheus extension to include an alert configuration. The configuration below creates an alerting rule named “example-alerts”, which causes Prometheus to fire an alert when the number of failed operations increases past a threshold.

Let’s watch the extension manager pick up on this and trigger a new application deployment.

If we navigate to Status > Rules, we can see our example alerting rule listed.

The example alerting rule deployed in the previous step states that, for each namespace, an alert should fire if the `example_operations_failed_count` counter exceeds three failed operations.
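The rule's logic can be sketched in Python. The PromQL string below is an assumed equivalent of the rule described above, not the post's actual configuration. `firing_namespaces` is a hypothetical helper that applies the same test to already-scraped samples: it sums the counter per namespace and flags any namespace whose total is strictly greater than three.

```python
# Assumed PromQL equivalent of the example rule (illustrative only).
EXAMPLE_EXPR = "sum by (namespace) (example_operations_failed_count) > 3"

THRESHOLD = 3.0


def firing_namespaces(
    samples: list[tuple[dict[str, str], float]],
    threshold: float = THRESHOLD,
) -> dict[str, float]:
    """Sum counter samples per namespace; keep namespaces above `threshold`."""
    totals: dict[str, float] = {}
    for labels, value in samples:
        namespace = labels.get("namespace", "")
        totals[namespace] = totals.get(namespace, 0.0) + value
    # "Exceeds" means strictly greater, so exactly three failures does not fire.
    return {ns: total for ns, total in totals.items() if total > threshold}
```

With one failure in team-a and four in team-b, only team-b would be returned, which matches the scenario that follows.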

Let’s break team-b’s application and send some requests so we can watch that happen.

In the Prometheus UI, we can see that the number of failed operations reported by the counter in the team-b namespace has increased to four.

And if we query for `ALERTS`, we will see our team-b namespace alert firing.
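The same `ALERTS` query can also be run against Prometheus's HTTP API at `/api/v1/query`. This sketch assumes a reachable Prometheus base URL (hypothetical here) and filters on `alertstate="firing"` so that pending alerts are excluded. `parse_alerts` pulls the label set out of each series in the instant-vector response.

```python
import json
import urllib.parse
import urllib.request


def alerts_query_url(base_url: str) -> str:
    """Build an instant-query URL that asks Prometheus for firing alerts only."""
    params = urllib.parse.urlencode({"query": 'ALERTS{alertstate="firing"}'})
    return f"{base_url.rstrip('/')}/api/v1/query?{params}"


def parse_alerts(body: str) -> list[dict[str, str]]:
    """Return the label set of every series in an instant-query JSON response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error', 'unknown error')}")
    return [series["metric"] for series in payload["data"]["result"]]


def fetch_firing_alerts(base_url: str) -> list[dict[str, str]]:
    """Query a (hypothetical) Prometheus endpoint and return firing alert labels."""
    with urllib.request.urlopen(alerts_query_url(base_url), timeout=10) as resp:
        return parse_alerts(resp.read().decode("utf-8"))
```

For example, with Prometheus port-forwarded to its default port 9090, `fetch_firing_alerts("http://localhost:9090")` would return one label dict per firing alert, including its `namespace` label.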

Of course, we would not want to use our example alert in a typical scenario: because the counter only ever increases, the alert would keep firing once the threshold had been crossed. In most cases, we should instead use a recording rule to precompute the rate of failures over a fixed interval and alert on that rate.
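To show what a rate over an interval means, here is a simplified Python version of a per-second counter rate. It treats any drop in value as a counter reset, but unlike Prometheus's `rate()` it does not extrapolate to the edges of the window, so treat it as an approximation. In PromQL, a recording rule might store something like `sum by (namespace) (rate(example_operations_failed_count[5m]))`; the `5m` window is an assumption.

```python
def simple_rate(samples: list[tuple[float, float]]) -> float:
    """Per-second increase of a counter from (timestamp_s, value) samples.

    A drop in value is treated as a reset to zero, so the post-reset value
    counts as new increase. No extrapolation to the window edges is done.
    """
    if len(samples) < 2:
        return 0.0
    ordered = sorted(samples)  # sort by timestamp
    increase = 0.0
    for (_, prev), (_, cur) in zip(ordered, ordered[1:]):
        increase += cur - prev if cur >= prev else cur
    elapsed = ordered[-1][0] - ordered[0][0]
    return increase / elapsed if elapsed > 0 else 0.0
```

Unlike the raw counter, this value falls back toward zero once failures stop, so an alert on it would resolve on its own.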

Now imagine a cluster operator receives one of these alerts for an application’s failed operations. While it’s great information to have, our cluster operator may not have an in-depth understanding of the failing application, and in that case would have to route the alert to the appropriate team for resolution.

Let’s save the cluster operator the unnecessary stress and label our team-a namespace with the appropriate owner details. We can later use this metadata to map any alert that arises from a particular namespace to its owner and route it accordingly.

If we wait out the 1-minute interval until the next scrape by the default-configured `kube-state-metrics` job, we will see our namespace's owner label come through as a label on the metric. We can also see that our alert now has the owner label defined.
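In PromQL, a common way to attach a namespace label to another series is a `group_left` join against kube-state-metrics' `kube_namespace_labels` metric, where a namespace label `owner` typically appears as `label_owner`, for example `<alert expression> * on (namespace) group_left (label_owner) kube_namespace_labels` (that metric's value is always 1, so multiplying leaves the alert value unchanged). Newer kube-state-metrics releases only expose labels listed in `--metric-labels-allowlist`. The Python sketch below mimics the join on plain label dicts; the function name and the `label_owner` default are assumptions.

```python
def enrich_with_owner(
    alert_series: list[dict[str, str]],
    namespace_labels: list[dict[str, str]],
    owner_key: str = "label_owner",
) -> list[dict[str, str]]:
    """Copy `owner_key` from namespace-label series onto alerts in the same namespace.

    Mimics `* on (namespace) group_left (label_owner)` on plain label dicts,
    except that alerts with no matching namespace are kept rather than dropped.
    """
    # Index the owner by namespace, skipping series without an owner label.
    owners = {
        series["namespace"]: series[owner_key]
        for series in namespace_labels
        if "namespace" in series and owner_key in series
    }
    enriched = []
    for labels in alert_series:
        merged = dict(labels)  # copy so the caller's dicts are not mutated
        owner = owners.get(labels.get("namespace", ""))
        if owner is not None:
            merged[owner_key] = owner
        enriched.append(merged)
    return enriched
```

One deliberate difference: PromQL's `*` drops alerts that have no matching namespace series, whereas this sketch keeps them without an owner so they can still fall back to a default route.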

Enriching our alert with metadata can be extremely useful, as we can now route signals to the correct team based on priority and/or ownership. For example, a cluster operator may find such tailored information useful; that said, depending on their role, they may also want to be made aware of failures across a broader set of services within the cluster.
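Alertmanager is the component that performs this routing in practice. The hypothetical Python function below only models the decision, assuming alerts carry a `label_owner` label and that each owner maps to a named receiver. Unowned alerts fall back to the cluster operator, and the `copy_operator` flag covers operators who want visibility across a broader set of services.

```python
from collections.abc import Mapping


def receivers_for(
    labels: Mapping[str, str],
    owner_receivers: Mapping[str, str],
    operator_receiver: str,
    copy_operator: bool = False,
    owner_key: str = "label_owner",
) -> list[str]:
    """Choose which (hypothetical) receivers should get one alert."""
    owner_receiver = owner_receivers.get(labels.get(owner_key, ""))
    if owner_receiver is None:
        # No owner label, or an owner with no receiver: the cluster operator triages it.
        return [operator_receiver]
    if copy_operator and owner_receiver != operator_receiver:
        # Operators who want a broader view also get a copy.
        return [owner_receiver, operator_receiver]
    return [owner_receiver]
```

Keeping the operator copy opt-in reflects the trade-off above: owners get targeted alerts, and the wider audience is only included when their role calls for it.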

What’s next?

Having the ability to route specific alerts can assist teams managing clusters with getting the right signal to the appropriate owner and reduce alert fatigue for the wider audience. Stay tuned for the next blog post in this series, in which we will walk through how to configure Alertmanager for alert routing.

VMware CRE is a team of Site Reliability Engineers and Program Managers who work together with Tanzu customers and partner teams to learn and apply reliability engineering practices using our Tanzu portfolio of services. As part of our product engineering organization, VMware CRE is responsible for some reliability engineering-related features for Tanzu. We are also in the escalation path for our technical support teams to help our customers meet their reliability goals.

Alexandra McCoy, Peri Thompson, and Peter Grant contributed to this post.

Source: VMware Tanzu Blog
