Cloud Tasks is a fully managed service that manages the execution, dispatch, and asynchronous delivery of a large number of tasks to App Engine or any arbitrary HTTP endpoint. You can also use a Cloud Tasks queue to buffer requests between services for more robust inter-service communication.

Cloud Tasks introduces two new features: queue-level routing configuration and the BufferTask API. Together, they let you create HTTP tasks and add them to a queue without needing the Tasks client library.

Cloud Tasks recap

Before we talk about the new features, let’s do a little recap. In Cloud Tasks, independent tasks, such as an HTTP request, are added to a queue and persist until they are asynchronously processed by an App Engine app or any arbitrary HTTP endpoint.

Cloud Tasks comes with a number of resiliency features such as:

- Task deduplication: Tasks added multiple times are dispatched once.

- Guaranteed delivery: Tasks are guaranteed to be delivered at least once and are typically delivered exactly once.

- Rate and retry controls: Control the execution by setting the rate at which tasks are dispatched, the maximum number of attempts, and the minimum amount of time to wait between attempts.

- Future scheduling: Control the time a task is run.
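As a rough illustration of the rate and retry controls listed above, the following sketch wraps `gcloud tasks queues update` in a small shell function. The function name `set_queue_limits` and the numeric values are illustrative assumptions, not recommendations; adjust them to your workload.

```shell
# Sketch: apply rate and retry controls to an existing queue.
# The function name and the numeric values are assumptions for illustration.
set_queue_limits() {
  local queue="$1" location="$2"
  # Dispatch at most 5 tasks per second, try each task at most 10 times,
  # and wait at least 2 seconds between attempts.
  gcloud tasks queues update "$queue" \
    --location="$location" \
    --max-dispatches-per-second=5 \
    --max-attempts=10 \
    --min-backoff=2s
}

# Usage (requires an authenticated gcloud CLI):
#   set_queue_limits my-queue us-central1
```

The block only defines the function, so nothing is changed until you call it with a queue name and location.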

What are HTTP target tasks?

In Cloud Tasks, you can create and add HTTP tasks to target any HTTP service running on Compute Engine, Google Kubernetes Engine, Cloud Run, Cloud Functions, or on-premises systems. By adding an HTTP task to a queue, you get Cloud Tasks to handle deduplication, guaranteed delivery, and so on.

This is great, but it comes with a caveat. The caller must use the Cloud Tasks client library to wrap the HTTP requests into tasks and add them to a queue. For example, the following C# sample shows how to wrap an HTTP request into a Task and a CreateTaskRequest before adding it to the queue:

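    // Requires the Google.Cloud.Tasks.V2 NuGet package.
    using Google.Cloud.Tasks.V2;

    // Wrap the HTTP request in a Task, and the Task in a CreateTaskRequest.
    var taskRequest = new CreateTaskRequest
    {
        Parent = new QueueName(projectId, location, queue).ToString(),
        Task = new Task
        {
            HttpRequest = new HttpRequest
            {
                HttpMethod = HttpMethod.Get,
                Url = url
            }
        }
    };

    // Add the task to the queue.
    var client = CloudTasksClient.Create();
    var response = client.CreateTask(taskRequest);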

This puts the burden of creating tasks on the caller, and wrapping the HTTP request into a task creates an unnecessary dependency between callers and the Tasks client library. Also, the burden of creating the task should really be on the target service, rather than the caller, as the target service is the one benefiting from the queue. The new queue-level routing configuration and the BufferTask API address this problem and provide an easier way to create tasks. Let’s take a closer look.

What is queue-level routing configuration?

Queue-level task routing configuration changes the HTTP task routing for the entire queue for all new and pending tasks. This allows easier creation of tasks as the HTTP target doesn’t need to be set at the task level. It shifts more control to the service provider, which is in a better position to set the target of all tasks in a queue (such as routing traffic to a different backend if the original backend is unavailable).

Create a queue with a routing configuration that adds or overrides the target URI as follows:

    gcloud beta tasks queues create $QUEUE \
      --http-uri-override=host:$SERVICE2_HOST \
      --location=$LOCATION

Now, whether the caller specifies a target URI or not, the queue redirects those tasks to the URI specified at the queue level.

The queue-level routing configuration is also useful when you need to change the HTTP URI of all pending tasks in a queue, such as when the target service goes down and you must quickly route to another service.
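A minimal sketch of that rerouting scenario might look like the following. It assumes that `gcloud beta tasks queues update` accepts the same `--http-uri-override` flag as the `create` command shown earlier; the function name `reroute_queue` and the host value are hypothetical.

```shell
# Sketch: point an existing queue (and its pending tasks) at another host.
# Assumption: the update command accepts the same --http-uri-override flag
# as the create command. reroute_queue is a hypothetical helper name.
reroute_queue() {
  local queue="$1" location="$2" new_host="$3"
  gcloud beta tasks queues update "$queue" \
    --http-uri-override="host:${new_host}" \
    --location="$location"
}

# Usage (requires an authenticated gcloud CLI):
#   reroute_queue my-queue us-central1 backup-service.example.com
```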

At this point, you might be wondering, “How does this help with easier HTTP tasks?” That’s a good question, and it takes us to our next topic, which is the new BufferTask API.

What is the BufferTask API?

BufferTask is a new API that allows callers to create an HTTP task without needing to provide any task configuration (HTTP URL, headers, authorization). The caller simply sends a regular HTTP request to the BufferTask API, which wraps the request into an HTTP task using the defaults in the queue-level routing configuration.

The BufferTask API enables easier integration with services. Cloud Tasks can now be deployed in front of services without needing any code changes on the caller side. Any arbitrary HTTP request sent to the BufferTask API is wrapped as a task and delivered to the destination set at the queue level.

To use the BufferTask API, the queue must have the Target URI configuration set. In other words, the queue-level routing configuration feature is a prerequisite for using the BufferTask API.

The following curl sample is the previous C# sample modified to create an HTTP task with the BufferTask API. Notice how it’s a simple HTTP POST request with a JSON body:

    curl -X POST https://cloudtasks.googleapis.com/v2beta3/projects/$PROJECT_ID/locations/$LOCATION/queues/$QUEUE/tasks:buffer \
      -H "Authorization: Bearer $ACCESS_TOKEN" \
      -d '{"message": "Hello World"}'
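To make the shape of that request easier to reuse, here is a small sketch that builds the BufferTask endpoint from its parts and fetches the access token with `gcloud auth print-access-token`. The helper names `buffer_task_url` and `send_buffer_task` are hypothetical, and the sketch assumes the active gcloud account is allowed to add tasks to the queue.

```shell
# Sketch: build the BufferTask endpoint and POST a payload to it.
# buffer_task_url and send_buffer_task are hypothetical helper names.
buffer_task_url() {
  local project="$1" location="$2" queue="$3"
  printf 'https://cloudtasks.googleapis.com/v2beta3/projects/%s/locations/%s/queues/%s/tasks:buffer' \
    "$project" "$location" "$queue"
}

send_buffer_task() {
  local project="$1" location="$2" queue="$3" payload="$4"
  local url token
  url="$(buffer_task_url "$project" "$location" "$queue")"
  # Assumption: the active gcloud account may create tasks in this queue.
  token="$(gcloud auth print-access-token)"
  curl -X POST "$url" \
    -H "Authorization: Bearer ${token}" \
    -d "$payload"
}

# Usage:
#   send_buffer_task my-project us-central1 my-queue '{"message": "Hello World"}'
```

Keeping the URL construction in its own function makes it easy to check the endpoint before sending anything.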

Creating HTTP tasks from application code is simpler too. For example, in this C# sample, an HTTP GET request is sent directly to the BufferTask API. The request isn’t wrapped in a Task and a CreateTaskRequest, and it doesn’t require the Cloud Tasks client library at all:

    using System;
    using System.Net.Http;

    // Build the BufferTask endpoint for the queue.
    var BufferTaskApiUrl = $"https://cloudtasks.googleapis.com/v2beta3/projects/{ProjectId}/locations/{Location}/queues/{Queue}/tasks:buffer";

    using (var client = new HttpClient())
    {
        client.DefaultRequestHeaders.Add("Authorization", $"Bearer {AccessToken}");
        // Send a plain HTTP GET; the BufferTask API wraps it into a task.
        var response = await client.GetAsync(BufferTaskApiUrl);
        var content = await response.Content.ReadAsStringAsync();
        Console.WriteLine($"Response: {content}");
    }

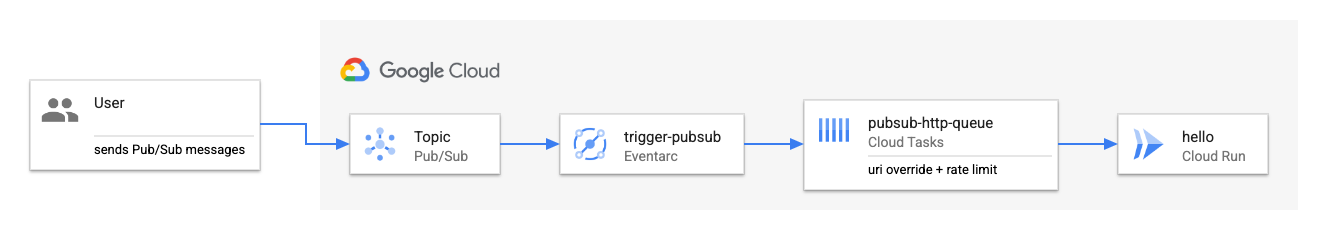

Cloud Tasks as a buffer between Pub/Sub and Cloud Run

Let’s take a look at a concrete example of Cloud Tasks as a buffer between services and how the new queue-level routing configuration and BufferTask API features help.

Imagine you have an application that publishes messages to a Pub/Sub topic managed by Eventarc, and Eventarc routes those messages to a Cloud Run service.

Suppose the app generates too many messages, and you want to apply a rate limit. Applying a rate limit prevents excessive autoscaling of the Cloud Run service. In this case, you can integrate a Cloud Tasks queue as a buffer to throttle the load.

To apply the rate limit and to avoid overwhelming the service, do the following:

- Create a Cloud Tasks queue with a routing configuration and URI override to point to the Cloud Run service.

- Set a rate limit to apply on the queue, such as 1 request/second.

- Change the Pub/Sub subscription endpoint to point to the BufferTask API.
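The three steps above can be sketched as a single shell function. This is only an outline under stated assumptions: `setup_buffer_pipeline` is a hypothetical name, the subscription is the one Eventarc created for the trigger, and the authentication settings that the push subscription needs to call the BufferTask API are left out.

```shell
# Sketch of the three steps. setup_buffer_pipeline is a hypothetical helper;
# push authentication for the subscription is intentionally omitted.
setup_buffer_pipeline() {
  local project="$1" location="$2" queue="$3" service_host="$4" subscription="$5"

  # Step 1: create the queue with a URI override pointing at the Cloud Run host.
  gcloud beta tasks queues create "$queue" \
    --http-uri-override="host:${service_host}" \
    --location="$location" || return 1

  # Step 2: throttle dispatches to 1 request per second.
  gcloud tasks queues update "$queue" \
    --location="$location" \
    --max-dispatches-per-second=1 || return 1

  # Step 3: point the Pub/Sub push subscription at the BufferTask API.
  gcloud pubsub subscriptions update "$subscription" \
    --push-endpoint="https://cloudtasks.googleapis.com/v2beta3/projects/${project}/locations/${location}/queues/${queue}/tasks:buffer"
}

# Usage (requires an authenticated gcloud CLI):
#   setup_buffer_pipeline my-project us-central1 my-queue my-service.example.com my-subscription
```

Each step returns early on failure so that the subscription is not redirected to a queue that was never created.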

Voila! You have a queue in the middle of your pipeline that’s throttling messages to your service at the rate you defined with no code or complex configuration. You can check out the details of how to set this up in our sample on GitHub. This pattern applies to any two services that can send HTTP requests to the BufferTask API and receive HTTP requests from Cloud Tasks.

This wraps up our discussion on Cloud Tasks and its new features. As usual, feel free to reach out to me on Twitter @meteatamel.

By Mete Atamel, Developer Advocate

Originally published at Google Cloud

Source: Cyberpogo
